A Quick Look Back: The Evolution of AI

AI has been around since the 1950s, when researchers first envisioned machines that could "think." Early AI systems were rule-based and rigid, but the rise of machine learning and deep learning transformed AI into something far more adaptive, able to learn from data and improve over time. By the 2010s, AI was powering everyday technologies like voice assistants, recommendation engines, and even early autonomous vehicles. But as AI matured, it became clear that relying solely on centralized cloud computing had its limits, especially for applications that demanded real-time responses, stronger privacy, and lower power consumption.

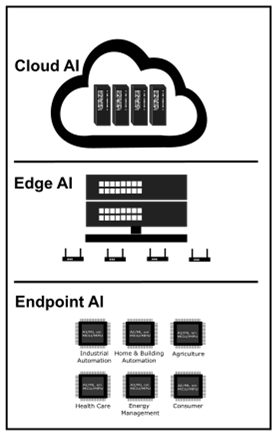

This led to a natural progression:

- Cloud AI centralized intelligence, making powerful tools broadly accessible.

- Edge AI shifted processing closer to the data source, reducing latency and enhancing privacy.

- Endpoint AI pushed ultra-efficient intelligence directly onto tiny devices.

Each phase reflected a growing need for faster, smarter, and more secure responses, driven by lessons learned and the evolving demands of modern technology.

Cloud AI: Highly Capable, Yet Not Flawless

In recent years, Cloud AI platforms have transformed how we interact with artificial intelligence. Some of the most prominent tools include:

- ChatGPT by OpenAI: Makes it simple to generate text, answer questions, write code, and brainstorm ideas.

- Google Gemini: Expands possibilities with multimodal capabilities, understanding and generating across text, images, and more.

- Microsoft Azure AI: Enables developers and enterprises to build, train, and deploy advanced machine learning models at scale.

- Amazon SageMaker: Provides an end-to-end platform for developing, training, and deploying sophisticated ML models.

These platforms excel at content generation, data analysis, customer support automation, and enterprise decision-making. They're also ideal for rapid prototyping, allowing teams to test and iterate quickly.

However, despite their strengths, Cloud AI isn't a one-size-fits-all solution. It depends on a stable internet connection, and every request must make a network round trip, which makes it less suitable for time-critical tasks like autonomous driving or industrial control. Because data is processed remotely, offline functionality is limited, and privacy concerns arise when sensitive information leaves the device. Continuously transmitting data to the cloud also costs energy, which makes Cloud AI a poor fit for power-constrained environments where efficiency is key.

These limitations have paved the way for Edge and Endpoint AI, technologies that bring intelligence closer to the source. By processing data locally, they offer faster responses, stronger privacy, and greater autonomy, especially in scenarios where cloud connectivity is impractical or insecure.

Edge AI: Smarter, Faster, and Locally Connected

Edge AI brings intelligence closer to where data is generated, on devices like cameras, vehicles, or industrial machines. Instead of sending everything to the cloud, it processes data locally, either directly on the device or via a nearby server or gateway.

In applications such as autonomous driving, industrial automation, and live video analysis, real-time decision-making is critical. Waiting for data to travel to the cloud and back simply isn't fast enough. Edge AI addresses this by making decisions right at the source, with near-instant response times.
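To put the latency argument in concrete terms, the short Python sketch below computes how far a vehicle travels while it waits for a decision. The 100 ms cloud round trip and 10 ms local inference time are illustrative assumptions, not measurements of any particular cloud service or edge device.

```python
def distance_travelled_m(speed_kmh: float, latency_ms: float) -> float:
    """Distance in metres covered at constant speed while waiting latency_ms."""
    speed_m_per_s = speed_kmh / 3.6              # km/h -> m/s
    return speed_m_per_s * (latency_ms / 1000.0)  # ms -> s


if __name__ == "__main__":
    # Hypothetical latencies: a cloud round trip vs. on-device inference.
    for label, latency_ms in [("cloud round trip (assumed)", 100.0),
                              ("local inference (assumed)", 10.0)]:
        d = distance_travelled_m(100.0, latency_ms)
        print(f"{label}: {latency_ms:.0f} ms -> {d:.2f} m at 100 km/h")
```

At 100 km/h, the assumed 100 ms round trip corresponds to roughly 2.78 m of travel before any decision arrives, compared with about 0.28 m for the assumed 10 ms local inference.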

It also brings major advantages in privacy and bandwidth. Since data is processed locally, it doesn't need to be transmitted to the cloud, reducing exposure and conserving network resources. Devices can also continue functioning offline, which is essential in remote or unstable environments.
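The bandwidth saving can be estimated with simple arithmetic. The Python sketch below compares an uncompressed 1080p camera stream with sending only detection results. The frame format, the number of detections per frame, and the 16-byte record size are illustrative assumptions. Because real cameras usually compress video before sending it, the computed ratio overstates the saving for a typical compressed stream.

```python
def raw_video_bytes_per_s(width: int, height: int, bytes_per_pixel: int, fps: int) -> int:
    """Uncompressed video data rate in bytes per second."""
    return width * height * bytes_per_pixel * fps


def detection_bytes_per_s(detections_per_frame: int, bytes_per_detection: int, fps: int) -> int:
    """Data rate if only detection results (e.g., class ID + bounding box) are sent."""
    return detections_per_frame * bytes_per_detection * fps


if __name__ == "__main__":
    raw = raw_video_bytes_per_s(1920, 1080, 3, 30)  # 1080p RGB at 30 fps
    meta = detection_bytes_per_s(10, 16, 30)         # assumed: 10 boxes x 16 bytes each
    print(f"raw video: {raw * 8 / 1e9:.2f} Gbit/s")
    print(f"detections only: {meta * 8 / 1e3:.1f} kbit/s")
    print(f"reduction factor: {raw / meta:,.0f}x")
```

Under these assumptions, the raw stream is about 1.49 Gbit/s, while the detection results need only about 38.4 kbit/s.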

However, not all devices have the power to run complex AI models independently. That's where local servers, often called edge gateways, come in. These act as nearby hubs that handle heavier processing tasks with low latency and allow multiple devices to collaborate and share resources efficiently.

Nevertheless, Edge AI comes with its own set of challenges. Local compute power is limited compared to the cloud, which can restrict the complexity of models. Managing a distributed network of edge devices and servers introduces maintenance complexity, requiring careful coordination and regular updates. Security is another concern; local systems must be well-protected to avoid vulnerabilities. And with data spread across many devices, centralizing insights can be difficult, leading to fragmentation.

Despite these hurdles, Edge AI continues to gain traction because it delivers what the cloud cannot: speed, autonomy, and control, right where it's needed the most.

Endpoint AI: Tiny Devices, Big Intelligence

Endpoint AI takes things a step further by running AI on ultra-small, low-power devices like microcontrollers and embedded systems. These devices don't just collect data; they analyze and act on it instantly.

Key Requirements for Endpoint AI

In many modern applications, from remote industrial sites to edge-based healthcare systems, devices must function efficiently under tight power budgets, where every milliwatt counts. Whether it's a sensor deployed in a harsh climate or a control unit in a mobile system, the challenge remains: deliver smart functionality without draining resources.

To meet these demands, energy efficiency is critical: AI models should consume minimal power while maintaining reliable performance. This requires rethinking design in favor of compact, efficient models whose algorithms run smoothly on devices with limited memory and compute resources.
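A back-of-the-envelope power budget shows why inference efficiency matters so much. The Python sketch below estimates the average current of a device that wakes periodically to run a model and sleeps the rest of the time, then derives an idealized battery life. All current, timing, and battery figures are hypothetical.

```python
def average_current_ma(active_ma: float, active_s: float,
                       sleep_ma: float, period_s: float) -> float:
    """Average current of a device that wakes once per period to run inference."""
    if not 0 <= active_s <= period_s:
        raise ValueError("active time must lie within the period")
    sleep_s = period_s - active_s
    return (active_ma * active_s + sleep_ma * sleep_s) / period_s


def battery_life_hours(capacity_mah: float, avg_ma: float) -> float:
    """Idealized battery life, ignoring self-discharge and voltage droop."""
    return capacity_mah / avg_ma


if __name__ == "__main__":
    # Hypothetical: 10 mA for 50 ms of inference every second, 5 uA while asleep.
    avg = average_current_ma(10.0, 0.05, 0.005, 1.0)
    hours = battery_life_hours(220.0, avg)  # assumed 220 mAh coin cell
    print(f"average current: {avg:.3f} mA, battery life: {hours / 24:.1f} days")
```

With these numbers, the average current is about 0.5 mA, which gives roughly 18 days on 220 mAh. The 50 ms active burst accounts for about 99% of that current, so shortening inference time directly extends battery life.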

Robust security is another top priority. Data must be protected on the device itself to preserve privacy, especially when dealing with sensitive information in healthcare or industrial operations, where relying on cloud connectivity isn't always feasible or safe.

Finally, reliable performance is non-negotiable. Devices must function consistently and independently, without depending on external networks or cloud services that may be unavailable or unstable in these settings.

Together, these requirements for power-constrained applications (energy efficiency, compact and efficient models, robust security, and reliable performance) form the foundation for intelligent systems in power-constrained environments, ensuring efficiency, security, and autonomy.

Challenges of Endpoint AI

The shift to Endpoint AI doesn't come without its challenges.

Many endpoint devices operate under severe hardware constraints: they have minimal processing power and memory, which makes it difficult to run traditional AI models. To overcome this, developers must optimize models by compressing and simplifying them, for example through quantization and pruning, while keeping any loss of accuracy to a minimum. It's a delicate balance between performance and efficiency.
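Post-training quantization is a common way to compress a model. Instead of storing each weight as a 32-bit float, the model stores 8-bit integers plus a scale factor. The Python sketch below shows the idea for a single weight tensor using symmetric per-tensor scaling. It is a generic illustration, not the method of any particular Renesas tool. Real toolchains typically add per-channel scales and calibrated activation ranges.

```python
def quantize_symmetric_int8(weights: list[float]) -> tuple[list[int], float]:
    """Map float weights to int8 in [-127, 127] using one symmetric scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from int8 values and the scale."""
    return [v * scale for v in q]


if __name__ == "__main__":
    weights = [0.42, -1.27, 0.003, 0.9]
    q, scale = quantize_symmetric_int8(weights)
    restored = dequantize(q, scale)
    max_err = max(abs(a - b) for a, b in zip(weights, restored))
    print("int8 values:", q)
    print(f"scale={scale:.5f}, max error={max_err:.5f} (bound: scale/2)")
    print(f"storage: {4 * len(weights)} bytes as float32 -> {len(q)} bytes as int8")
```

For the four example weights, storage drops from 16 bytes to 4 bytes. Each restored value stays within half a quantization step (scale / 2) of the original, which is the accuracy cost traded for the 4x size reduction.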

Managing these models across millions of devices adds another layer of complexity. Deployment and updates can be logistically demanding, especially when devices are distributed across diverse geographies and environments. Interoperability is also crucial; devices must integrate seamlessly with broader systems and networks, regardless of manufacturer or protocol.

Despite these hurdles, Endpoint AI is rapidly emerging as the next frontier in intelligent computing. It enables real-time decision-making directly on the device, eliminating the need to wait for cloud responses. This not only improves speed and responsiveness but also supports scalable, decentralized AI ecosystems where intelligence is distributed rather than centralized.

Most importantly, Endpoint AI enhances privacy and autonomy by keeping data local. Sensitive information remains on the device, reducing exposure and increasing trust, especially in industries like healthcare, manufacturing, and smart infrastructure.

Renesas Electronics Enables Endpoint AI

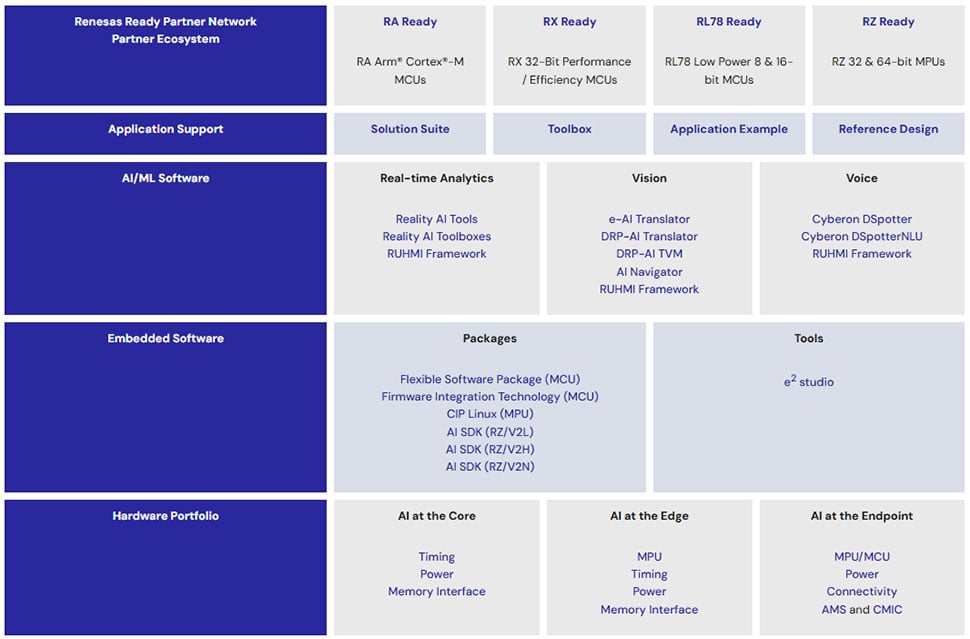

Renesas addresses the challenges of Endpoint AI with a robust, scalable solution stack designed to support intelligent, connected systems from silicon to software.

The Renesas solution stack delivers a complete, scalable environment for building intelligent, connected AIoT systems. It starts with a robust hardware portfolio of MCUs and MPUs that supports everything from low-power sensing to high-performance edge processing. On this foundation, embedded software, software development kits (SDKs), and tools like the e² studio IDE enable seamless integration. The next layer adds AI/ML software for real-time analytics, vision, and voice, powered by specialized frameworks such as Reality AI Tools® and Robust Unified Heterogeneous Model Integration (RUHMI). Application support accelerates development with curated resources, while the partner ecosystem ensures compatibility and trusted integration, delivering a smooth journey from silicon to intelligence.

Next, we'll explore the processors and software layers that give this solution stack its flexibility and performance for diverse AIoT applications.

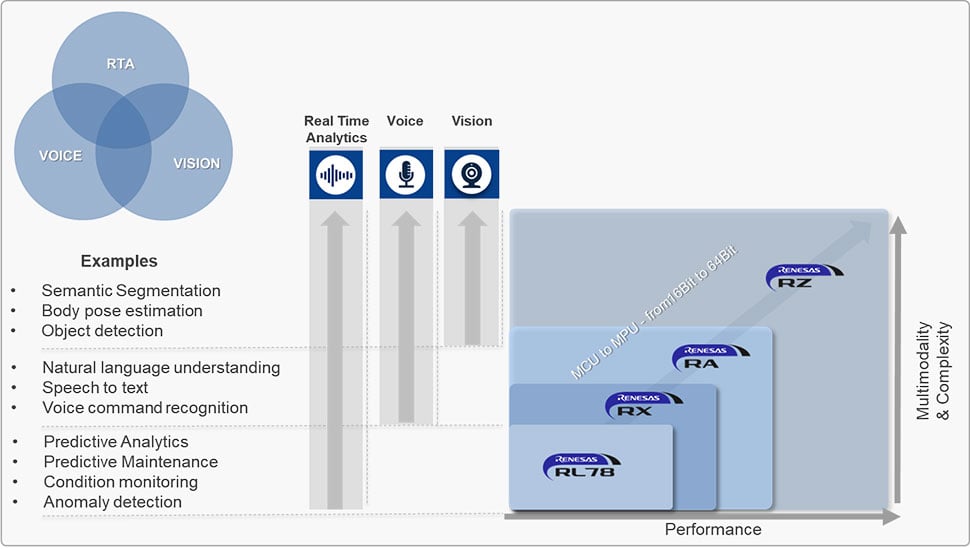

Hardware Lineup – From Ultra-Low Power MCUs to High-Performance MPUs

Renesas offers a scalable portfolio of 16-bit and 32-bit MCUs and 64-bit MPUs tailored to meet the evolving demands of AI/ML workloads across a wide range of applications, from simple sensing to complex multimodal processing.

- RL78: Ultra-low power for simple, single-function AI tasks.

- RX: Efficient performance for basic multimodal AI.

- RA: Higher compute power for advanced voice, vision, and analytics.

- RZ: Maximum processing for complex, real-time multimodal AI.

This progression ensures developers can align compute resources with the specific AI/ML demands of their application, scaling from simple analytics to fully integrated, intelligent edge solutions.

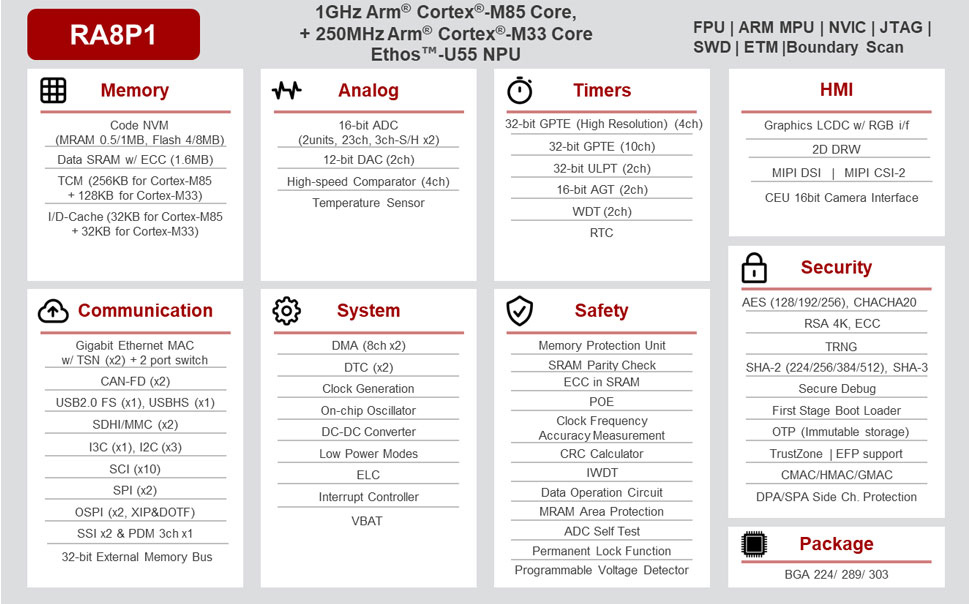

Hardware Featuring the Ethos™-U55 Neural Processing Unit

The RA8P1 group, Renesas' first 32-bit AI-accelerated MCUs, features Arm® Cortex®-M85 cores, an integrated Arm Ethos™-U55 NPU, large on-chip memory, advanced peripherals, and external memory interfaces, making it well suited to compute-intensive AI workloads. Available in single- and dual-core variants, it offers scalability and built-in security features, including cryptographic IP, immutable storage, and tamper detection. Development is supported by the Flexible Software Package (FSP), the RUHMI AI platform, and e² studio for a streamlined workflow.

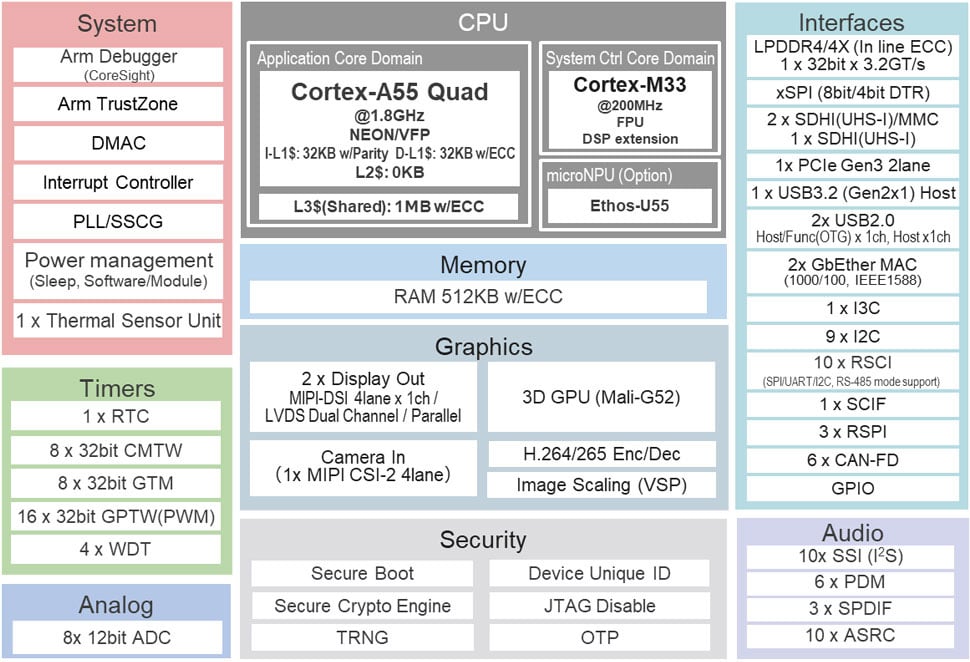

Designed for edge AI, the RZ/G3E microprocessor combines a quad-core Arm Cortex-A55 and a Cortex-M33 with an integrated NPU for advanced AI inference. It supports rich human-machine interface (HMI) applications, with a Mali-G52 GPU, video codecs, and multi-screen full HD output, plus LPDDR4/4X memory and high-speed interfaces like PCIe Gen3 and Gigabit Ethernet. Built-in cryptographic engines and tamper protection ensure secure, high-performance edge computing.

Software & Tools

Accelerate AI innovation, from design to deployment, with Renesas' intelligent tools and frameworks.

Reality AI Tools and Utilities

Reality AI Tools is a streamlined platform for building fast, efficient TinyML and Edge AI models. It leverages advanced signal processing and automated data exploration to help developers identify the optimal sensors and sensor placement, and even generate component specifications, all within a unified workflow.

Models are fully explainable in both time and frequency domains, and the platform outputs optimized code for:

- Arm Cortex-M, Cortex-A, and Cortex-R cores

- Renesas' proprietary RX and RL78 cores

This enables confident deployment from data to device. With the Reality AI Explorer Tier, developers can experiment hands-on, validate models, and accelerate development across real-world applications.
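This article doesn't describe Reality AI's internal feature engineering. The Python sketch below is only a generic illustration of what "time-domain" and "frequency-domain" features can look like for one window of sensor data. It computes the RMS amplitude and the dominant frequency from a discrete Fourier transform. A naive DFT is used for clarity. On an MCU, an optimized FFT, such as the one in CMSIS-DSP, would be the usual choice.

```python
import cmath
import math


def rms(signal: list[float]) -> float:
    """Time-domain feature: root-mean-square amplitude of the window."""
    return math.sqrt(sum(x * x for x in signal) / len(signal))


def dominant_frequency_hz(signal: list[float], sample_rate_hz: float) -> float:
    """Frequency-domain feature: frequency of the largest DFT bin, excluding DC."""
    n = len(signal)
    if n < 2:
        raise ValueError("need at least two samples")
    best_k, best_mag = 1, -1.0
    for k in range(1, n // 2 + 1):  # positive-frequency bins only (naive O(n^2) DFT)
        coeff = sum(x * cmath.exp(-2j * math.pi * k * i / n) for i, x in enumerate(signal))
        if abs(coeff) > best_mag:
            best_k, best_mag = k, abs(coeff)
    return best_k * sample_rate_hz / n  # convert bin index to Hz


if __name__ == "__main__":
    fs = 1000.0  # assumed sample rate in Hz
    # Synthetic vibration window: unit-amplitude 50 Hz sine, 200 samples (0.2 s).
    sig = [math.sin(2 * math.pi * 50 * i / fs) for i in range(200)]
    print(f"RMS = {rms(sig):.4f}, dominant frequency = {dominant_frequency_hz(sig, fs):.1f} Hz")
```

For the synthetic 50 Hz test signal, the sketch reports an RMS of about 0.7071 (1/√2) and a dominant frequency of 50 Hz. Because both features map directly to physical quantities, they are easy to interpret.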

Reality AI™ Utilities

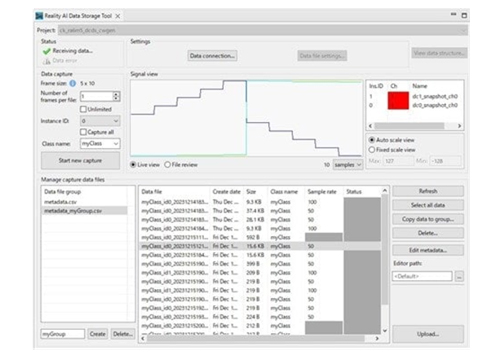

Reality AI Utilities simplifies the journey from raw sensor data to AI models deployed on edge devices like MCUs and MPUs. Whether you use Renesas e² studio or another IDE, it supports a full round-trip AI workflow, from data collection and model training to validation and deployment. Key features include:

- Built-in tools to capture live sensor data from any Renesas-based project

- Direct upload to Reality AI Tools

- Return of optimized AI modules ready to integrate into your code

With FSP/FIT integration, sensor data flows are abstracted, so you can focus on building, not plumbing.
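Before sensor data reaches a model, a continuous stream is typically cut into fixed-length windows. The Python sketch below shows one generic way to do this with overlapping windows. The window size and hop are hypothetical parameters, and the function is not the API generated by FSP/FIT or Reality AI Utilities.

```python
from collections.abc import Iterable, Iterator


def sliding_windows(samples: Iterable[float], size: int, hop: int) -> Iterator[list[float]]:
    """Yield fixed-size windows that advance by `hop` samples (overlap = size - hop)."""
    if size <= 0 or not 0 < hop <= size:
        raise ValueError("require size > 0 and 0 < hop <= size")
    buffer: list[float] = []
    for s in samples:
        buffer.append(s)
        if len(buffer) == size:
            yield list(buffer)  # hand a copy of the full window to the model
            del buffer[:hop]    # slide forward by `hop` samples


if __name__ == "__main__":
    for window in sliding_windows(range(10), size=4, hop=2):
        print(window)
```

For a stream of 0 to 9 with a window size of 4 and a hop of 2, the sketch yields `[0, 1, 2, 3]`, `[2, 3, 4, 5]`, `[4, 5, 6, 7]`, and `[6, 7, 8, 9]`. The buffer never holds more than one window, which keeps memory use bounded on small devices.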

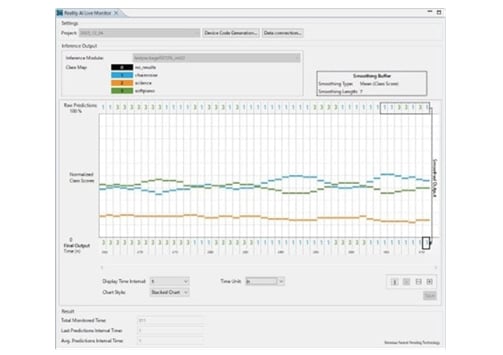

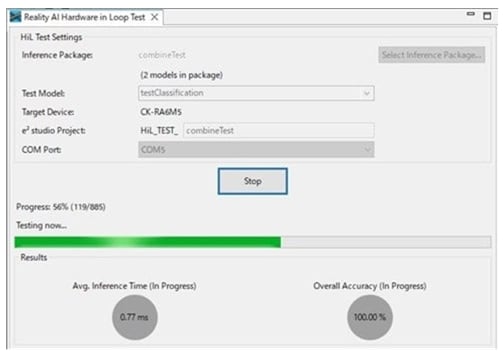

For validation, hardware-in-the-loop (HiL) testing and the AI Live Monitor provide real-time visibility into model performance on actual hardware, making it easier to debug, tune, and trust your edge AI.

Figure 7. AI Live Monitor

Figure 8. Data Collection Tool

Figure 9. HiL Testing

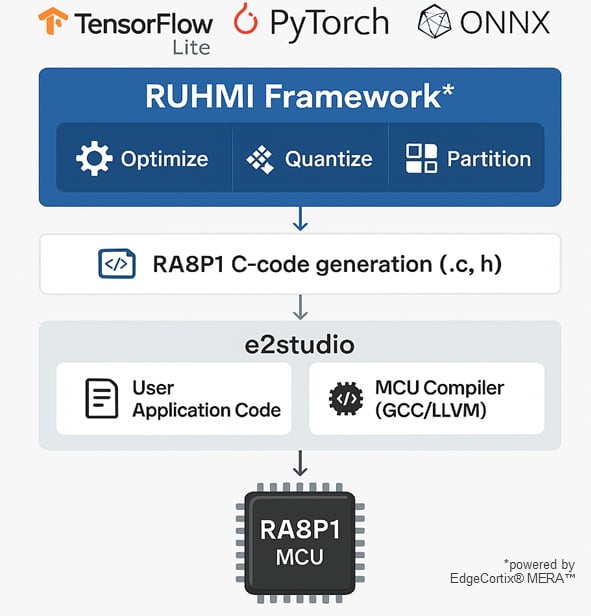
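Hardware-in-the-loop testing makes one simple check possible: comparing the model's outputs on the target with a reference run on the workstation. The Python sketch below assumes both sets of class scores have already been collected. The function names and metrics (top-1 agreement and maximum absolute difference) are illustrative choices, not the interface of Renesas' HiL or AI Live Monitor tools.

```python
from collections.abc import Sequence


def argmax(values: Sequence[float]) -> int:
    """Index of the highest score (the predicted class)."""
    return max(range(len(values)), key=lambda i: values[i])


def compare_outputs(reference: Sequence[Sequence[float]],
                    on_device: Sequence[Sequence[float]]) -> tuple[float, float]:
    """Return (top-1 agreement rate, max absolute score difference) over all inputs."""
    if len(reference) != len(on_device) or not reference:
        raise ValueError("need the same, non-zero number of outputs")
    agree = sum(argmax(r) == argmax(d) for r, d in zip(reference, on_device))
    max_diff = max(abs(a - b)
                   for r, d in zip(reference, on_device)
                   for a, b in zip(r, d))
    return agree / len(reference), max_diff


if __name__ == "__main__":
    ref = [[0.1, 0.9], [0.8, 0.2]]    # hypothetical workstation scores
    dev = [[0.12, 0.88], [0.3, 0.7]]  # hypothetical on-device scores
    print(compare_outputs(ref, dev))
```

A low agreement rate or a large score difference, as in the second hypothetical input here, is a signal to investigate quantization settings or input preprocessing on the device.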

RUHMI Framework (Robust Unified Heterogeneous Model Integration)

The RUHMI Framework simplifies the journey from AI model to embedded deployment, whether you're just starting or optimizing production. Built into Renesas e² studio or available as a standalone tool, RUHMI features a powerful AI compiler that generates highly optimized code for Renesas embedded processors in minutes.

It supports model import from popular frameworks like TensorFlow Lite (TFLite), PyTorch, and ONNX, handling post-training calibration, quantization, and conversion automatically. The result: clean, efficient C/C++ code ready for deployment. With Python APIs, CLI and GUI options, and ready-to-use examples, including for the latest RA8P1 MCUs, RUHMI fits seamlessly into your workflow.
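RUHMI performs calibration and quantization automatically, and this article doesn't describe its internals. As a generic illustration of what post-training calibration means, the Python sketch below derives an affine (asymmetric) int8 scale and zero point from the minimum and maximum values observed on a few calibration batches. It follows the widely used convention real = scale × (q − zero_point). The function names are hypothetical, and production tools often use more robust range estimators than plain min/max.

```python
def calibrate_int8(calibration_batches: list[list[float]]) -> tuple[float, int]:
    """Pick an affine int8 scale and zero point from observed min/max values."""
    lo = min(min(batch) for batch in calibration_batches)
    hi = max(max(batch) for batch in calibration_batches)
    lo, hi = min(lo, 0.0), max(hi, 0.0)   # range must include 0.0 so it maps to an integer
    scale = (hi - lo) / 255 if hi > lo else 1.0
    zero_point = -128 - round(lo / scale)  # the observed minimum maps to -128
    return scale, zero_point


def quantize(x: float, scale: float, zero_point: int) -> int:
    """Float -> int8, clamping values outside the calibrated range."""
    return max(-128, min(127, round(x / scale) + zero_point))


def dequantize(q: int, scale: float, zero_point: int) -> float:
    """int8 -> approximate float."""
    return (q - zero_point) * scale


if __name__ == "__main__":
    scale, zp = calibrate_int8([[-1.0, 0.5], [2.0]])  # hypothetical activation samples
    for x in (-1.0, 0.0, 1.0, 2.0, 5.0):
        q = quantize(x, scale, zp)
        print(f"{x:5.2f} -> {q:4d} -> {dequantize(q, scale, zp):.4f}")
```

Because 0.0 always maps exactly to the integer zero point, zero padding and ReLU outputs survive quantization without error. Values outside the calibrated range, such as 5.0 in the demo, are clamped, which is why representative calibration data matters.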

Renesas AI Model Deployer (RAIMD): Vision AI Made Developer-Friendly

The Renesas AI Model Deployer brings the power of the Vision AI Model Zoo into a clean, intuitive GUI, making it easier for embedded developers to build, train, and deploy vision AI models at the edge.

Running locally on your workstation, it eliminates the need for cloud infrastructure and gives you full control over your workflow. With support for object detection and image classification, you can go from dataset to deployment in just a few clicks. The tool handles project setup, dataset analysis, training with quantization-aware training (QAT) and pruning, visual evaluation, and hardware deployment.
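This article doesn't document RAIMD's exact pruning method. As a generic illustration, unstructured magnitude pruning zeroes the weights with the smallest absolute values, on the assumption that they contribute least to the output. The Python sketch below shows the core step for a flat list of weights. In practice, pruning is usually applied gradually during training, with fine-tuning to recover accuracy, and sparsity only saves memory or compute if the runtime exploits it.

```python
def magnitude_prune(weights: list[float], sparsity: float) -> list[float]:
    """Zero out the given fraction of weights with the smallest magnitudes."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    k = int(len(weights) * sparsity)  # number of weights to remove
    smallest = set(sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k])
    return [0.0 if i in smallest else w for i, w in enumerate(weights)]


if __name__ == "__main__":
    print(magnitude_prune([0.5, -0.1, 0.05, -0.8], sparsity=0.5))
```

At 50% sparsity, the two smallest-magnitude weights (-0.1 and 0.05) are zeroed, and the large weights that dominate the layer's output are kept unchanged.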

Live camera inference, USB streaming, and real-time feedback help validate models quickly and confidently. For advanced users, included Jupyter notebooks on GitHub enable deeper customization, like data augmentation, hyperparameter tuning, and Bring Your Own Model (BYOM) workflows.

Development Platforms

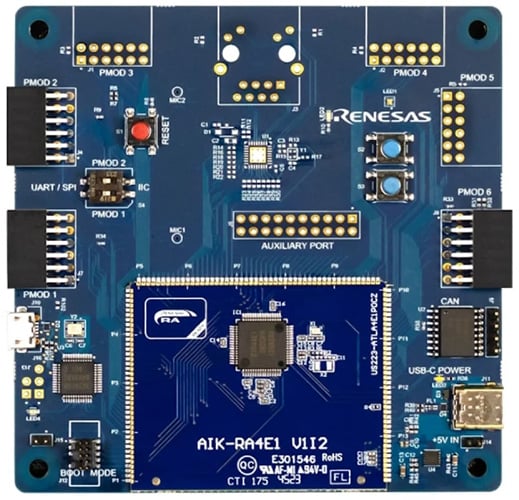

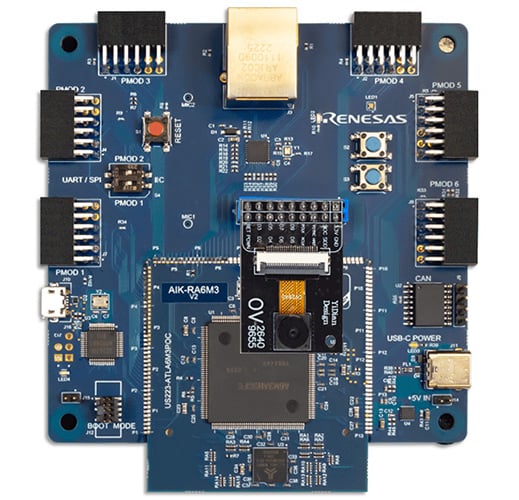

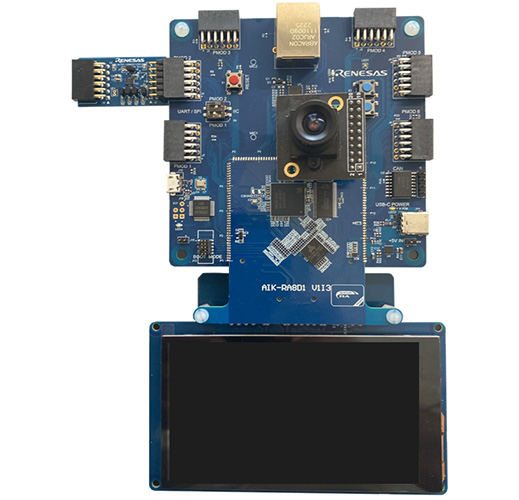

AI Kits - AIK Reference Boards

All AI kits are designed to enable flexible, multimodal AI/ML development across vision, audio, and analytics, providing a common foundation for rapid prototyping regardless of the specific board. Boards such as the AIK-RA8D1 are more than just development hardware; they serve as launchpads for innovation. With reconfigurable connectivity, developers can easily plug in sensors, swap out components, and reimagine their AI/ML systems without being limited by rigid architectures.

Whether the goal is vision-based recognition, real-time analytics, or audio processing, the AIK-RA8D1 is ready. It integrates seamlessly with onboard analog microphones and a built-in camera, enabling single or multimodal AI/ML solutions with ease.

The real power comes from pairing the kit with Reality AI software, which enables practical, real-time insights. Through the Renesas Ready Partner Network, developers also gain access to a rich ecosystem of tools and examples tailored to vision and audio use cases.

From evaluating pre-built applications to crafting new ones, the AI kits are more than development hardware; they are a canvas for creativity and a gateway to the future of embedded intelligence.

Solution Suites, Application Examples, and Solution Suite Concepts

To accelerate innovation, Renesas offers Solution Suites, a curated collection of application examples, reference designs, and AI/ML-enabled concepts. These suites combine hardware, software, and pre-integrated intelligence to help developers move from ideas to implementation with confidence.

Whether you're building smart HVAC systems, intelligent edge devices, or next-generation industrial solutions, Renesas Solution Suites provide a head start, so you can focus on creating.

Renesas also offers a rich partner ecosystem, on-demand training, and reference designs to reduce time to market.

AI began in labs, moved to the cloud, accelerated at the edge, and now runs on the tiniest devices around us. Each step has solved real-world challenges, making AI more responsive, private, and accessible. With Renesas leading the way, Endpoint AI is becoming smarter, more scalable, and easier to deploy, bringing intelligence to everything from wearables and robotics to industrial machines and beyond. Discover how Renesas is advancing Edge AI across real-time analytics, voice, and vision.

Resources

Edge AI Insights: Optimizing Embedded Systems, Tools, & Techniques Video