Have you ever been asked, “Couldn't you use AI to improve the performance of our system?”

Or, “Can you add more value to our equipment by incorporating AI into it?”

Artificial intelligence, deep learning, neural networks... AI applications are expanding rapidly, and not a day goes by without hearing these words. At the same time, many people share the same thought: “I'm interested, but even after looking into it a bit, I don't quite understand how to use it in my work, and I can't imagine what value it would bring.”

In this blog post, I will introduce how to evaluate AI using the software package for the RZ/V series, Renesas' embedded AI processors.

What is AI?

If you're already familiar with AI and deep learning, you can skip this section, but I'd like to start by reviewing some AI fundamentals.

Artificial intelligence (AI). AI has been explained in many ways in books and on websites, from AI that plays chess like a pro to AI that turns on the lights in a room when you simply ask. Here, however, I will focus on a narrow definition of AI: artificial intelligence that uses neural network technology, specifically Vision AI, which performs various kinds of recognition and judgment on images.

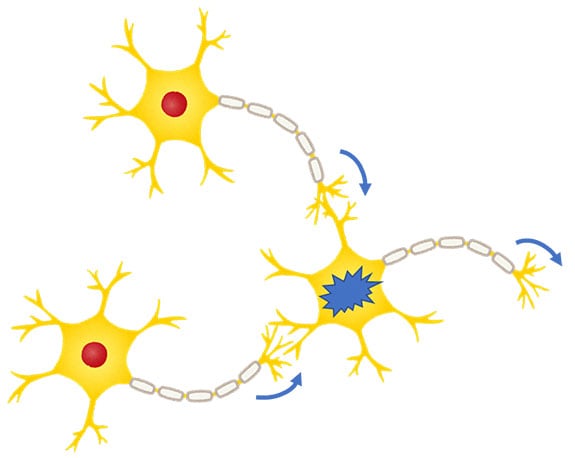

Neural network. As shown in Figure 1, each neuron in an organism's brain receives inputs from multiple other neurons and transmits a signal to the next neuron. Memory, recognition, and judgment are understood to arise from a combination of two mechanisms: how strongly each incoming signal is used (weighting) and how the weighted sum of the inputs is passed on to the output side (activation function).

Figure 1. Connections between Neurons

AI based on neural networks imitates this mechanism. It performs recognition and judgment through an enormous number of arithmetic operations: weighting multiple inputs, summing them, and passing the result through an activation function to the next stage.
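The mechanism just described can be sketched in a few lines of Python. This is a minimal illustration, assuming ReLU (max(0, x)) as the activation function, which is one common choice, plus an optional bias term that most artificial neurons also have. The function names, input values, and weights are hypothetical and chosen by hand, whereas a real network obtains its weights through learning.

```python
from typing import Sequence


def relu(x: float) -> float:
    """Activation function: pass positive values through, clamp negatives to 0."""
    return max(0.0, x)


def neuron(inputs: Sequence[float], weights: Sequence[float], bias: float = 0.0) -> float:
    """Weight each input, sum the results (plus a bias), and apply the activation."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    weighted_sum = sum(x * w for x, w in zip(inputs, weights)) + bias
    return relu(weighted_sum)


# Signals arriving from three upstream neurons, with hand-picked weights.
print(neuron([1.0, 0.5, -2.0], [0.8, -0.4, 0.1]))  # weighted sum is about 0.4
print(neuron([1.0, 0.5, -2.0], [-0.8, 0.4, 0.1]))  # negative sum, so ReLU outputs 0.0
```

A neural network layer is simply many of these neurons computed side by side, each with its own weights, and its outputs become the inputs of the next layer.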

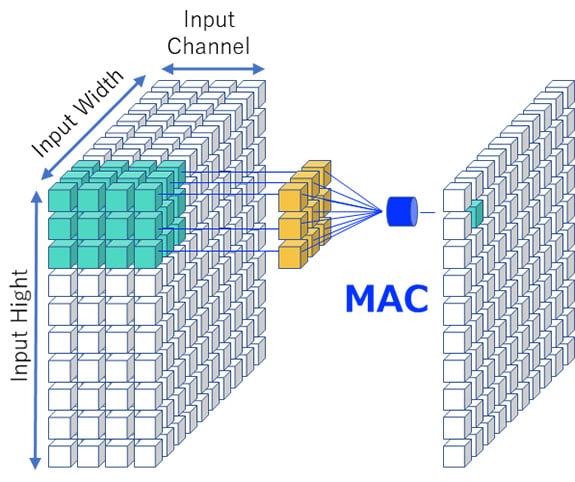

Take, for example, the 3x3 convolution, an operation often used in Vision AI to quantify the features of an input image. The image is divided into 3 pixel x 3 pixel windows (typically sliding one pixel at a time, so neighboring windows overlap). Each value in a window (the pixel value) is weighted, the weighted values are summed, and the sum is converted into an output value by the activation function and sent to the next stage (Figure 2).

Figure 2. Example of a 3x3 Convolutional Operation by a Neural Network

This process is carried out over the entire input image. The resulting output, called a feature map (it is not exactly something you can view as an image, but data that abstracts the features of the input image), is then sent to the next layer of the neural network, where the same process is repeated.
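Below is a minimal sketch of the 3x3 convolution in Figure 2, written in plain Python so every multiply-and-add is visible. It assumes a single-channel (grayscale) image, a stride of 1, no padding, and ReLU as the activation. Real Vision AI models apply many kernels per layer to multi-channel data, and the hand-written edge-detecting kernel here stands in for weights that would normally be learned.

```python
from typing import List

Matrix = List[List[float]]


def conv3x3(image: Matrix, kernel: Matrix) -> Matrix:
    """Slide a 3x3 kernel over the image (stride 1, no padding) and apply ReLU.

    Each output value is the weighted sum of one 3x3 window of pixels,
    passed through the activation function.
    """
    rows, cols = len(image), len(image[0])
    out: Matrix = []
    for r in range(rows - 2):
        out_row = []
        for c in range(cols - 2):
            total = 0.0
            for i in range(3):
                for j in range(3):
                    total += image[r + i][c + j] * kernel[i][j]
            out_row.append(max(0.0, total))  # ReLU activation
        out.append(out_row)
    return out


# A 4x5 grayscale "image": dark on the left, bright on the right.
image = [
    [0, 0, 9, 9, 9],
    [0, 0, 9, 9, 9],
    [0, 0, 9, 9, 9],
    [0, 0, 9, 9, 9],
]
# A hand-written vertical-edge detector (a trained network learns its own weights).
kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]
feature_map = conv3x3(image, kernel)
print(feature_map)  # -> [[27.0, 27.0, 0.0], [27.0, 27.0, 0.0]]
```

The large values mark where the window straddles the dark-to-bright edge, and the zero marks a uniform region. Because the window cannot be centered on border pixels without padding, a 4x5 input yields a 2x3 feature map, which the next layer would process in turn.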

Even simple image recognition, such as distinguishing digits and letters, requires several layers of this computation, and general object recognition requires dozens. By repeating these operations many times, a neural network can recognize the digit 5 as a 5, or locate the dog in an image of a dog.

Put briefly, the biggest difference from conventional software is this: with conventional software, people design the processing they want (the algorithm) and write a program for it, whereas AI based on neural networks uses a large amount of input data to prepare the internal data needed for the processing (the weighting parameters) automatically. In other words, in AI the leading role is played not by the program but by the data. While conventional software development centers on programming, the most important and time-consuming part of AI development is preparing the weighting parameters (learning) so that the model can recognize and judge the target objects with the required accuracy and speed.
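The toy sketch below, far simpler than training a real network, illustrates the idea that parameters come from data rather than from a hand-written rule. It uses gradient descent, the family of methods commonly used to train neural networks, on a one-input linear model. The data, learning rate, epoch count, and function name are arbitrary choices for illustration.

```python
from typing import List, Tuple


def train_linear(data: List[Tuple[float, float]], lr: float = 0.05,
                 epochs: int = 2000) -> Tuple[float, float]:
    """Fit y ~ w*x + b to example pairs by gradient descent on mean squared error.

    Nobody writes the rule "multiply by 2 and add 1"; w and b are
    adjusted step by step until the model reproduces the examples.
    """
    w, b = 0.0, 0.0  # start from arbitrary parameters
    n = len(data)
    for _ in range(epochs):
        # Gradients of mean((w*x + b - y)^2) with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Example pairs produced by a rule the program is never told: y = 2x + 1.
samples = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]
w, b = train_linear(samples)
print(f"learned w={w:.3f}, b={b:.3f}")
```

A real network does the same thing with millions of weights and a large image dataset, which is why learning is the time-consuming step that pre-trained models let you skip.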

The AI evaluation software package introduced here uses pre-trained models provided through AI frameworks such as PyTorch, so you can evaluate image inference without going through the time-consuming learning process.

Now let's walk through a concrete example of Vision AI evaluation.

What is required?

There is no need to purchase an evaluation board from the start. In this blog, I will help you experience the Vision AI implementation flow of the embedded AI processor RZ/V series, using only free open source software (hereafter referred to as OSS) and a software package provided free of charge on the web by Renesas Electronics.

*2 TensorFlow, the TensorFlow logo and any related marks are trademarks of Google Inc.

*3 DRP-AI Translator: ONNX conversion tool from Renesas Electronics

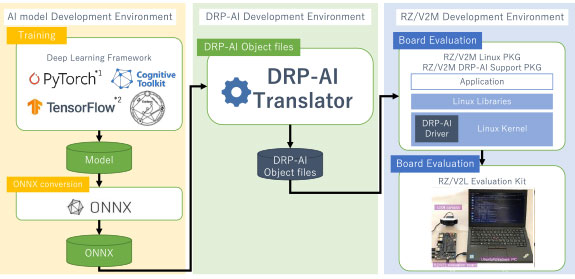

Figure 3. RZ/V2L AI Implementation Tool Flow Overall Structure

Figure 3 shows the overall tool flow. Because many people already use one of the various industry-standard frameworks for AI learning, we designed the RZ/V series development flow so that you can train with the existing AI framework you are familiar with and then pass the trained neural network model to the Renesas tools in a common format called ONNX (Open Neural Network Exchange). ONNX is widely adopted, and most AI frameworks can output models in ONNX format, either directly or through a conversion tool. Here, we will use PyTorch as our example.

In the AI world, Linux, not Windows, is the de facto standard, and all the OSS and software packages used here require a PC running Linux (Ubuntu).

There is a technology called WSL2 (Windows Subsystem for Linux 2) that runs Linux in a virtual environment on Windows, but the Renesas evaluation package is not guaranteed to work with WSL2, so please prepare a PC with Ubuntu installed. If you don't plan to do AI learning, you won't need a high-performance machine, so an old PC you have on hand will do.

First, a Linux PC and the DRP-AI Support Package

Getting the Linux PC ready

For the hardware, you can use your own PC with a 64-bit x86 CPU and at least 6 GB of RAM. For the OS, however, we will use Ubuntu 20.04, a Linux distribution, instead of Windows.
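If you want to confirm that a PC meets these requirements, the hypothetical helper script below checks the CPU architecture, total RAM, and Ubuntu version using only the Python standard library. It is a rough sketch, assuming a Linux system that provides /etc/os-release and POSIX sysconf, and it treats the 6 GB threshold in decimal gigabytes. It does not replace the requirements stated in Renesas' documentation.

```python
import os
import platform


def parse_os_release(text: str) -> dict:
    """Parse KEY=value lines in /etc/os-release format into a dict."""
    info = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        info[key] = value.strip('"')
    return info


def total_ram_gb() -> float:
    """Total physical memory in decimal GB, via POSIX sysconf (available on Linux)."""
    return os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES") / 1e9


def check_host(min_ram_gb: float = 6.0) -> dict:
    """Report whether this PC matches the hardware and OS requirements."""
    try:
        with open("/etc/os-release", encoding="utf-8") as f:
            os_info = parse_os_release(f.read())
    except OSError:
        os_info = {}  # file missing: probably not a Linux system
    return {
        "64-bit x86 CPU": platform.machine() == "x86_64",
        f"RAM >= {min_ram_gb} GB": total_ram_gb() >= min_ram_gb,
        "Ubuntu 20.04": os_info.get("ID") == "ubuntu"
        and os_info.get("VERSION_ID") == "20.04",
    }


if __name__ == "__main__":
    for requirement, ok in check_host().items():
        print(f"{requirement}: {'OK' if ok else 'NOT MET'}")
```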

Go to the Ubuntu 20.04 download page and download the ISO file ubuntu-20.04.6-desktop-amd64.iso.

On Windows, you can use the Rufus tool to write the ISO file to a bootable USB drive, then boot the target PC from that drive to install Ubuntu 20.04.

(Please refer to https://rufus.ie/en/ for details on using Rufus.)

Getting the software ready

First, download the software package “RZ/V2L DRP-AI Support Package” from the Renesas website. It is a ZIP file of more than 2 GB, so we recommend downloading it over a high-speed internet connection.

Unzip this ZIP file and open the rzv2l_ai-implementation-guide folder. There you will find the file rzv2l_ai-implementation-guide_en_rev5.00.pdf (hereafter referred to as the “Implementation Guide”).

The guide is written as a step-by-step tutorial. By following it, you can evaluate the included trained neural network model, from conversion through to actual operation on the evaluation board. Below, I will introduce some points to keep in mind when using this guide for your evaluation.

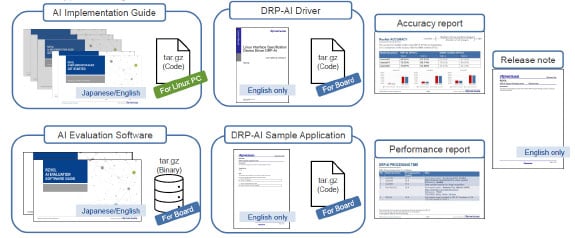

Figure 4. DRP-AI Support Package Details

Creating the ONNX file

From here, we will be using the command line in Linux.

(If you are new to Linux, you will need to learn the basic commands of the OS itself.)

The AI framework PyTorch and its companion library torchvision can be installed from the Linux command line with the pip3 command (see the Implementation Guide, Chapter 2.2, page 26).
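After installing, you can check that both packages are visible to Python with a small sketch like the one below. It uses only the standard library (importlib). The function name is hypothetical, and it only reports what is installed; the versions the guide expects are listed in the Implementation Guide and are not checked here.

```python
import importlib.util
from importlib import metadata
from typing import Dict, Iterable, Optional


def installed_versions(packages: Iterable[str]) -> Dict[str, Optional[str]]:
    """Map each package to its installed version, or None if it cannot be imported."""
    result: Dict[str, Optional[str]] = {}
    for name in packages:
        if importlib.util.find_spec(name) is None:
            result[name] = None
            continue
        try:
            result[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            result[name] = "unknown"  # importable, but no distribution metadata found
    return result


if __name__ == "__main__":
    for pkg, ver in installed_versions(["torch", "torchvision"]).items():
        print(f"{pkg}: {ver if ver else 'NOT INSTALLED'}")
```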

Inside the DRP-AI Support Package you downloaded, you'll find the compressed file rzv2l_ai-implementation-guide_ver5.00.tar.gz. Extract it as described in the Implementation Guide (page 30).

Similarly, in the pytorch_mobilenet folder under rzv2l_ai-implementation-guide, you'll find the files pytorch_mobilenet_en_rev5.00.pdf (hereafter referred to as the “MobileNet Guide”) and pytorch_mobilenet_ver5.00.tar.gz. Extract the latter as described in the Implementation Guide.
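The Implementation Guide describes how to extract these archives from the command line. As an alternative, the sketch below does the same with Python's standard tarfile module, assuming the .tar.gz file is in the current directory. It also refuses archive entries that would land outside the destination folder. The function name and default destination are hypothetical; follow the guide for where each archive should be extracted.

```python
import tarfile
from pathlib import Path
from typing import List


def extract_archive(archive: str, dest: str = ".") -> List[str]:
    """Extract a .tar.gz archive into dest and return the names of its members."""
    dest_path = Path(dest).resolve()
    with tarfile.open(archive, "r:gz") as tar:
        members = tar.getmembers()
        # Reject entries such as "../x" that would escape the destination folder.
        for m in members:
            target = (dest_path / m.name).resolve()
            if target != dest_path and dest_path not in target.parents:
                raise ValueError(f"unsafe path in archive: {m.name}")
        if hasattr(tarfile, "data_filter"):  # extraction filters (Python 3.12+)
            tar.extractall(dest_path, filter="data")
        else:
            tar.extractall(dest_path)
        return [m.name for m in members]


if __name__ == "__main__":
    archive = Path("pytorch_mobilenet_ver5.00.tar.gz")
    if archive.exists():
        print(extract_archive(str(archive)))
    else:
        print(f"{archive} not found in the current directory")
```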

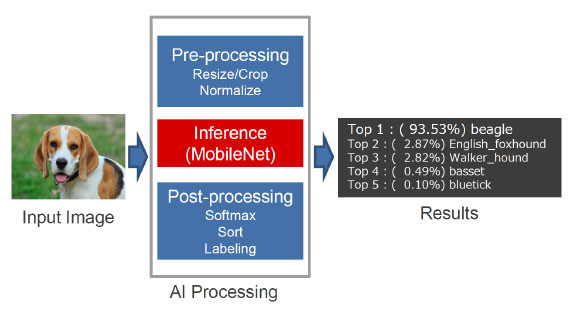

MobileNet is a lightweight, fast neural network for image recognition developed for mobile and embedded devices. For the object in an image, it outputs the probability of each class it has been trained to recognize. For example, in Figure 5, the probability that the object is a beagle is 93.53%, the highest of all classes, so the image is classified as a beagle, which is the correct answer.

Figure 5. Example of Image Recognition by MobileNet
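How does a network produce a percentage like 93.53%? A common approach, sketched below, is to apply the softmax function to the raw scores (logits) of the final layer; torchvision's MobileNet v2, for instance, outputs logits rather than probabilities. The class names and scores here are hypothetical, and a real ImageNet-trained model has 1000 outputs rather than four.

```python
import math
from typing import List, Tuple


def softmax(logits: List[float]) -> List[float]:
    """Convert raw network scores (logits) into probabilities that sum to 1."""
    m = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_k(logits: List[float], labels: List[str], k: int = 3) -> List[Tuple[str, float]]:
    """Return the k most probable labels with their probabilities in percent."""
    probs = softmax(logits)
    ranked = sorted(zip(labels, probs), key=lambda p: p[1], reverse=True)
    return [(label, 100.0 * p) for label, p in ranked[:k]]


# Hypothetical final-layer scores for four classes.
labels = ["beagle", "basset", "Walker hound", "tabby cat"]
logits = [6.0, 3.0, 2.5, -1.0]
for label, pct in top_k(logits, labels):
    print(f"{label}: {pct:.2f}%")
```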

If you follow Chapter 2 of the MobileNet Guide (pytorch_mobilenet_en_rev5.00.pdf), you will end up with a file called mobilenet_v2.onnx. This file is the pre-trained MobileNet v2 neural network model, including its weighting parameters, in ONNX format.

Now that we have the trained model as an ONNX file, let's move on to the next phase: creating DRP-AI Object files and estimating performance.

Converting to DRP-AI Object files with DRP-AI Translator

From here, we will use the ONNX conversion tool DRP-AI Translator by Renesas. First, download the DRP-AI Translator from the Renesas website.

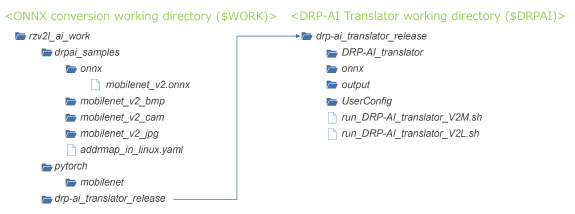

Unzip the download and run the installer as described in Chapter 3.1 of the Implementation Guide. If you follow the steps in the Implementation Guide and the MobileNet Guide, you should end up with a directory structure like the one in Figure 6.

Figure 6. Directory Structure of the ONNX Files and the DRP-AI Translator

Next, prepare the input for the DRP-AI Translator by copying, renaming, and editing the sample file. Refer to Chapters 3.3 to 3.5 of the MobileNet Guide for detailed instructions.

When you are ready, the ONNX translation itself takes a single command. For RZ/V2L, run the following from the shell:

$ ./run_DRP-AI_translator_V2L.sh mobilenet_v2 -onnx ./onnx/mobilenet_v2.onnx

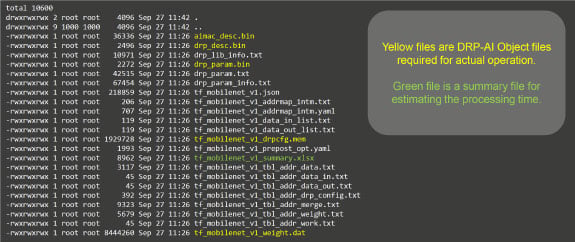

This generates the binary files needed to run MobileNet on a real chip in the output/mobilenet_v2/ directory under the working directory (Figure 7).

Figure 7. List of Files after Running DRP-AI Translator

How to read the performance estimation Excel file

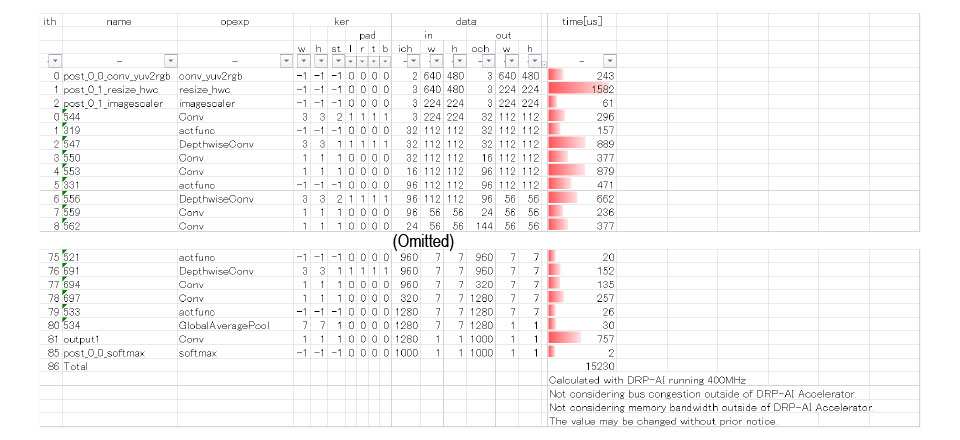

In addition to the objects required for evaluation on the actual device, the DRP-AI Translator outputs a summary of the converted neural network model as an Excel file (Figure 8).

Along with the structure of the neural network model, this Excel file lists the approximate processing time of each layer (each function) of the model, which lets you estimate overall performance. These times are for the DRP-AI alone and exclude the limits imposed by the LSI's internal bus and the external DRAM bandwidth, so treat them as approximate figures; actual processing on the chip can take longer.

Figure 8. Example of mobilenet_v2_summary.xlsx
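Once you have the per-layer times, the overall estimate is simple arithmetic: add up the layers and convert the total to frames per second. The sketch below assumes the times are in milliseconds and that the layers run one after another. The layer names and numbers are placeholders, not values from an actual mobilenet_v2_summary.xlsx, and reading the Excel file itself is left out because its column layout is not described here.

```python
from typing import Iterable, Tuple


def estimate_performance(layer_times_ms: Iterable[Tuple[str, float]]) -> Tuple[float, float]:
    """Sum per-layer times (ms) and convert the total to an optimistic frame rate.

    The per-layer times are DRP-AI stand-alone estimates that exclude bus and
    DRAM bandwidth limits, so the real frame rate is likely to be lower.
    """
    total_ms = sum(t for _, t in layer_times_ms)
    if total_ms <= 0:
        raise ValueError("total processing time must be positive")
    return total_ms, 1000.0 / total_ms


# Placeholder numbers only, NOT taken from a real mobilenet_v2_summary.xlsx.
layers = [("conv_first", 2.0), ("bottleneck_blocks", 14.0), ("conv_last", 3.0), ("fc", 1.0)]
total, fps = estimate_performance(layers)
print(f"total about {total:.1f} ms, about {fps:.1f} frames/s (DRP-AI stand-alone)")
```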

Summary

In this post, we introduced a free software package from Renesas Electronics that lets users who are interested in AI, but don't know where to start, try it out. With the object files created here, you are one step closer to evaluating AI performance on a real chip. The RZ/V2L evaluation board kit used in this post is available for purchase, so you can experience the AI performance, low power consumption, and low heat generation of the actual chip.

The RZ/V series of Renesas embedded AI processors also includes the RZ/V2M, which offers approximately 1.5 times the AI performance of the RZ/V2L introduced here. An RZ/V2M DRP-AI Support Package is also available and can be used in the same way as described above.