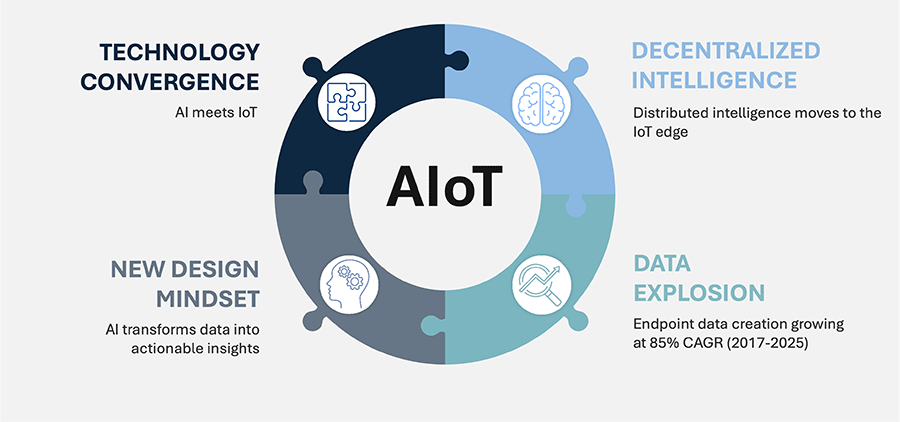

Artificial intelligence at the IoT edge, or AIoT, is redefining how connected devices capture, process, and analyze data to produce actionable outcomes in a variety of consumer and industrial applications. Unlike cloud-based AI, where power consumption, data latency, and security management are major design concerns, AIoT moves intelligence closer to the data source to enable real-time, in-situ decision-making with enhanced privacy and lower energy use.

Despite its promise, AI at the IoT edge carries significant engineering challenges. Traditional AI models are computationally intensive, requiring large amounts of memory and power that resource-constrained IoT devices, often battery-operated with limited processing capacity, cannot easily support. Instead, designers need highly optimized, lightweight neural network models that run efficiently on microcontrollers (MCUs), microprocessors (MPUs), and other low-power hardware without significantly sacrificing performance or accuracy.

Managing AIoT Processing with TinyML Models

Because it is inherently decentralized, AIoT reduces dependence on cloud servers, acts on real-time analytics immediately, and improves security by keeping data local. For example, factory equipment can be outfitted for predictive maintenance by embedding machine learning (ML) models in local sensors that detect anomalies or faults without waiting for cloud analysis. Likewise, smart home devices with AI-enhanced voice interfaces can perform instant keyword recognition and natural language understanding without sending sensitive audio data over the network.
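
To make the predictive-maintenance example more concrete, here is a minimal sketch of on-device anomaly flagging. It is a deliberately simple statistical stand-in (a running z-score computed with Welford's online algorithm), not a trained TinyML model, and the class name, threshold, and warm-up length are illustrative assumptions rather than anything Renesas or Arm specifies.

```python
import math


class StreamingAnomalyDetector:
    """Hypothetical detector: flags readings far from the running baseline.

    Welford's online algorithm keeps only a count, a mean, and a sum of
    squared deviations, so memory use stays constant no matter how many
    samples arrive.
    """

    def __init__(self, threshold: float = 4.0, warmup: int = 30):
        self.threshold = threshold      # z-score above which a reading is flagged
        self.warmup = max(warmup, 2)    # need >= 2 samples for a sample std dev
        self.count = 0
        self.mean = 0.0
        self.m2 = 0.0                   # sum of squared deviations from the mean

    def update(self, x: float) -> bool:
        """Return True if x is anomalous; otherwise fold x into the baseline."""
        if self.count >= self.warmup:
            std = math.sqrt(self.m2 / (self.count - 1))
            if std > 0 and abs(x - self.mean) / std > self.threshold:
                return True  # skip learning so a fault cannot drag the baseline
        self.count += 1
        delta = x - self.mean
        self.mean += delta / self.count
        self.m2 += delta * (x - self.mean)
        return False


# Simulated vibration readings: a steady signal followed by a spike.
detector = StreamingAnomalyDetector()
for i in range(100):
    detector.update(0.9 if i % 2 else 1.1)
print(detector.update(5.0))  # the spike
print(detector.update(1.0))  # back to normal
```

A firmware version would more likely be written in C and run once per sensor sample; the design choice worth noting is that flagged readings are excluded from the baseline, so a developing fault does not gradually redefine "normal."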

As in AI data centers, AIoT at the edge is evolving to handle a growing volume of inference workloads. If data is the fuel for intelligent, real-time decision-making, then AI inference is the engine: it runs pre-trained ML models on new data directly on edge devices.

AI workloads in the data center have a unique set of computational requirements, best served by powerful parallel processors that can train and run large language models (LLMs) with billions of parameters. At the other end of the spectrum, edge AIoT techniques such as TinyML minimize memory requirements and computing overhead, making real-time analytics feasible for battery-powered IoT endpoints. TinyML inference also enables multi-modal applications that combine voice, vision, and sensor data for advanced use cases like environmental monitoring and autonomous navigation.
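
The memory gap between data center and edge models comes down to simple arithmetic: weight storage is roughly parameter count times bits per weight. The model sizes in this sketch are hypothetical, chosen only to show the scale difference, and the calculation ignores activations, code, and runtime overhead.

```python
def weight_footprint_bytes(num_params: int, bits_per_weight: int) -> int:
    """Storage needed for a model's weights alone (no activations or code)."""
    return num_params * bits_per_weight // 8


# Hypothetical model sizes, for illustration only.
llm = weight_footprint_bytes(7_000_000_000, 16)   # 7B-parameter LLM in 16-bit floats
tiny = weight_footprint_bytes(250_000, 8)         # 250k-parameter int8 TinyML model

print(f"LLM weights:    {llm / 1e9:.1f} GB")
print(f"TinyML weights: {tiny / 1e3:.1f} kB")
print(f"Ratio:          {llm // tiny:,}x")
```

Tens of gigabytes calls for data center accelerators, while a few hundred kilobytes is within reach of the on-chip memory of many microcontrollers.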

Real-time data processing is further complicated by the memory limitations, modest energy budgets, and thermal constraints of edge AIoT hardware. Many consumer and industrial applications, such as smart home voice recognition and autonomous sensors, demand ultra-low-latency responses. Cloud-based AI struggles to meet these requirements because of network round-trip delays, making on-device inference essential. Engineers must also protect data security and privacy by embedding strong encryption and root-of-trust mechanisms directly in the endpoint.

TinyML techniques are critical for overcoming these barriers, enabling compact machine learning models that run efficiently on IoT hardware while extending battery life.

Renesas Optimizes New MCUs and MPUs for Edge AIoT

To better serve edge AIoT applications, Renesas recently expanded its processor portfolio, introducing new high-performance, low-power MCUs and MPUs with integrated neural processing units (NPUs) purpose-built for AI computing.

The 32-bit Renesas RA8P1 MCU is designed for voice and vision edge AI applications and features dual Arm® cores, the 1GHz Cortex®-M85 and 250MHz Cortex-M33, and an Arm Ethos™-U55 NPU that delivers up to 256GOPS of AI performance. For security, the new MCU supports the Arm TrustZone® secure execution environment, hardware root-of-trust, secure boot, and advanced cryptographic engines to support secure deployment in critical edge applications.

Renesas also introduced the 64-bit RZ/G3E MPU for high-performance edge AIoT and human-machine interfaces (HMIs). It combines a quad-core Arm Cortex-A55 CPU, a Cortex-M33 core, and advanced graphics with an embedded Arm Ethos-U55 NPU that offloads the main CPU, delivering up to 512GOPS of AI performance for image classification, voice recognition, and anomaly detection.
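
Peak NPU figures such as 256GOPS and 512GOPS are typically derived from the width of the multiply-accumulate (MAC) array and the clock rate, counting each MAC as two operations. This sketch assumes both devices use the Ethos-U55's largest configuration of 256 MACs per cycle; the clock rates it prints are back-calculated under that assumption, not taken from a datasheet.

```python
def peak_gops(macs_per_cycle: int, clock_hz: float) -> float:
    """Peak throughput in GOPS, counting each multiply-accumulate as two ops."""
    return macs_per_cycle * 2 * clock_hz / 1e9


def implied_clock_mhz(gops: float, macs_per_cycle: int) -> float:
    """NPU clock (MHz) needed to reach a quoted peak GOPS figure."""
    return gops * 1e9 / (macs_per_cycle * 2) / 1e6


# Assumption: a 256-MAC/cycle Ethos-U55 configuration on both devices.
for name, gops in [("RA8P1", 256), ("RZ/G3E", 512)]:
    print(f"{name}: {gops} GOPS -> ~{implied_clock_mhz(gops, 256):.0f} MHz NPU clock")
```

Peak GOPS is a ceiling; sustained throughput on a real network depends on how well its layers map onto the NPU and on memory bandwidth.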

Arm NPUs Right-Size Power and Performance for AIoT Applications

The Arm Ethos-U55 NPU supports popular neural network models such as ResNet, DS-CNN, and MobileNet, with up to 35x faster inference than CPU-only processing. Unlike GPUs, which draw tens to hundreds of watts for high-throughput parallel computing, the Ethos-U55 delivers hardware-accelerated inference at milliwatt-level power, making it well suited to IoT edge devices.

The Arm NPU supports compressed and quantized (for example, 8-bit integer) neural networks, reducing memory and compute overhead to enable real-time, local AI processing. GPUs, in contrast, excel at training large models but are often impractical for battery-powered edge deployments because of their size, cost, and energy use.
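
Quantization can be illustrated with the affine int8 scheme used by TensorFlow Lite, in which a real value is represented as x ≈ scale × (q − zero_point). The sketch below is a simplified per-tensor version in plain Python; production converters typically add per-channel scales, calibration data, and their own rounding rules, so treat it as an illustration of the idea rather than any toolchain's exact behavior.

```python
def choose_qparams(x_min: float, x_max: float, qmin: int = -128, qmax: int = 127):
    """Pick a scale and zero point so [x_min, x_max] maps onto [qmin, qmax]."""
    x_min, x_max = min(x_min, 0.0), max(x_max, 0.0)  # range must contain 0.0
    scale = (x_max - x_min) / (qmax - qmin)
    if scale == 0:
        scale = 1.0  # degenerate all-zero tensor
    zero_point = round(qmin - x_min / scale)
    return scale, max(qmin, min(qmax, zero_point))


def quantize(values, scale, zero_point, qmin=-128, qmax=127):
    """Real -> int8: q = round(x / scale) + zero_point, clamped to [qmin, qmax].

    Note: Python's round() rounds exact halves to even.
    """
    return [max(qmin, min(qmax, round(v / scale) + zero_point)) for v in values]


def dequantize(qvalues, scale, zero_point):
    """Int8 -> real: x = scale * (q - zero_point)."""
    return [scale * (q - zero_point) for q in qvalues]


weights = [-0.51, 0.0, 0.27, 1.02]
scale, zp = choose_qparams(min(weights), max(weights))
q = quantize(weights, scale, zp)
print(scale, zp, q)
print(dequantize(q, scale, zp))
```

Each weight now occupies one byte instead of four (for 32-bit floats), and the round-trip error for in-range values is at most half a quantization step, which is why int8 models usually lose little accuracy.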

Integrated RUHMI Framework and e² studio Streamline AI Edge Development

The new MCU and MPU are both supported by the Renesas e² studio integrated development environment and incorporate Renesas' RUHMI Framework to accelerate edge AIoT design. RUHMI (Robust Unified Heterogeneous Model Integration) is an end-to-end toolset and Renesas' first comprehensive MCU/MPU framework for simplifying AI workloads on resource-constrained devices. RUHMI supports models from leading ML frameworks and formats such as TensorFlow™ Lite, PyTorch®, and ONNX, enabling developers to import and optimize pre-trained models for high-performance, low-power edge AI deployments.

The RUHMI framework is complemented by Renesas' e² studio, which provides intuitive tools, sample applications, and debugging features. Together, they let developers pre-process image and audio data, execute inference on the NPU, and post-process results within a single environment.
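
The three stages named here (pre-processing, inference, post-processing) can be sketched as a small plain-Python pipeline. Everything in it is hypothetical: `run_inference` is a toy stub standing in for the NPU call and is not a RUHMI or e² studio API, and the labels and output quantization parameters are invented so the example runs end to end.

```python
import math

# Hypothetical labels and output quantization parameters (illustration only).
LABELS = ["background", "person"]
OUT_SCALE, OUT_ZERO_POINT = 0.05, -10


def preprocess(pixels_u8):
    """Shift 0..255 camera pixels into the -128..127 range an int8 model expects."""
    return [p - 128 for p in pixels_u8]


def run_inference(input_i8):
    """Stub standing in for the NPU call a real application would make.

    This toy "model" scores mean brightness so the pipeline runs end to end.
    """
    score = max(-127, min(127, round(sum(input_i8) / len(input_i8))))
    return [-score, score]  # int8 scores: [background, person]


def postprocess(output_i8):
    """Dequantize the scores, apply softmax, and return the top label."""
    logits = [OUT_SCALE * (q - OUT_ZERO_POINT) for q in output_i8]
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]  # subtract max for numerical stability
    probs = [e / sum(exps) for e in exps]
    best = max(range(len(probs)), key=lambda i: probs[i])
    return LABELS[best], probs[best]


frame = [200, 210, 190, 220]  # a tiny fake "image"
label, confidence = postprocess(run_inference(preprocess(frame)))
print(label, f"{confidence:.3f}")
```

Keeping the three stages as separate functions mirrors how the work is usually split on-device: pre- and post-processing stay on the CPU, and only the middle step would be replaced by the deployed model running on the NPU.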

Edge AIoT Relies on Processors with Low Power and High Compute Density

According to Grand View Research, the global edge AI market was valued at more than $20 billion in 2024 and is projected to reach nearly $66.5 billion by 2030, driven by demand for real-time data processing and analysis at the network edge.
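
As a quick check on these figures, growth from about $20 billion in 2024 to $66.5 billion in 2030 (six years) implies a compound annual growth rate of roughly 22%. Because the 2024 figure is "more than" $20 billion, the actual rate implied by the report is slightly lower.

```latex
\mathrm{CAGR} = \left(\frac{66.5}{20}\right)^{1/6} - 1 = 3.325^{1/6} - 1 \approx 0.222
```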

Increasingly, MCUs and MPUs are the preferred choice for edge AIoT vision and voice applications because of their low power consumption, localized processing, and cost efficiency. Unlike cloud GPU servers, which depend on network connectivity and draw high power, MCUs and MPUs can process data directly at the endpoint, enabling real-time inference and decision-making without network delays. By keeping sensitive data on-device, these processors also enhance security and privacy, reducing the need for constant cloud communication.

This combination of speed, energy efficiency, and data security makes MCUs and MPUs ideal for wearables, smart homes, and industrial edge AI systems.

Future Efforts Will Prioritize HD Vision, Security, and a Robust IoT Supply Chain

As we right-size our processor ecosystem for highly efficient TinyML models, Renesas is also developing MPUs for Vision Transformer (ViT) networks. This form of deep learning applies the Transformer architecture, originally designed for natural language processing, to computer vision. Unlike power-hungry GPUs, these MPUs are intended to run ViTs on high-resolution images and video without the need for cooling fans.
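
Why high-resolution ViT inference is demanding can be shown with a little arithmetic: a ViT splits an image into fixed-size patches, treats each patch as a token, and self-attention compares every token with every other token. This sketch assumes the common 16×16-pixel patch size, ignores the extra class token many ViTs add, and counts only attention-score entries rather than full compute.

```python
def vit_token_count(height: int, width: int, patch: int = 16) -> int:
    """Number of non-overlapping patch tokens a ViT extracts from an image."""
    if height % patch or width % patch:
        raise ValueError("image dimensions must be multiples of the patch size")
    return (height // patch) * (width // patch)


def attention_entries(tokens: int) -> int:
    """Size of one head's token-by-token attention matrix in one layer."""
    return tokens * tokens


for h, w in [(224, 224), (1024, 1024)]:
    n = vit_token_count(h, w)
    print(f"{h}x{w}: {n} tokens, {attention_entries(n):,} attention scores per head")
```

Going from 224×224 to 1024×1024 pixels multiplies the token count by about 21 but the attention matrix by more than 400, because attention cost grows quadratically with the number of tokens.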

Renesas is also creating zero-touch security solutions such as post-quantum cryptography (PQC), which is designed to resist attacks from both classical and quantum computers, to better defend against a widening range of cyber threats.

As we foster AI-accelerated hardware, software, and toolchain development, Renesas remains committed to supporting legacy (non-AI) products and the open-source software environment that powers much of today's IoT systems. By collaborating with our partner ecosystem to keep abreast of the rapidly changing IoT landscape, we can better help our customers design sustainable, smart, secure, and connected systems safely and reliably.