Developing AI models for embedded processors presents several unique challenges. Unlike cloud-based AI, where large models can leverage massive datasets and server-grade processing, edge AI demands the highest fidelity of data to ensure accuracy within tight resource constraints. With limited memory, compute power, and model size, the quality of your input data directly impacts performance, making efficient data collection, real-time monitoring, and rapid on-device testing essential for success.

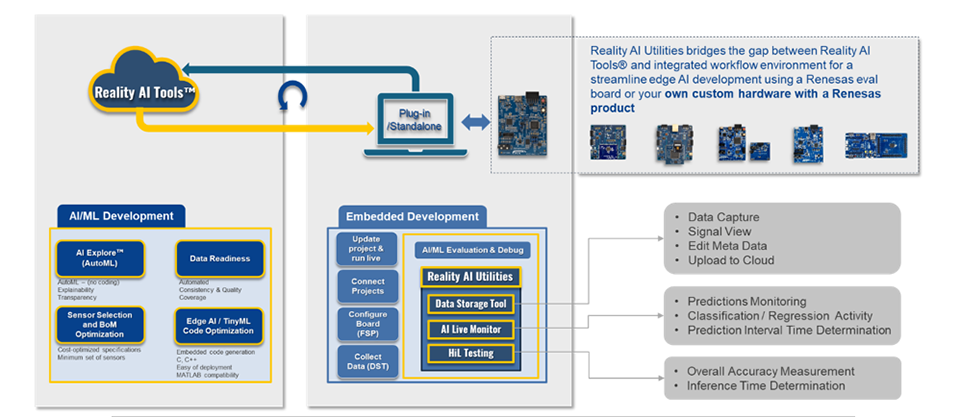

To meet these demands, Renesas provides Reality AI™ Utilities, a suite of powerful tools that enable end-to-end edge AI development. These utilities are available as plug-ins for e² studio and can also be used as standalone tools in other popular IDEs like CS+, Keil, and IAR, offering flexibility no matter your development environment. Reality AI Utilities consist of the following:

- Data Storage Tool – Captures sensor and microphone signals from your hardware and uploads them to the Reality AI Tools® cloud platform for high-fidelity signal analysis and AI model development.

- AI Live Monitor – Visualizes real-time inference on the device, including raw class scores and post-processing logic, helping developers debug and optimize models with instant feedback.

- Hardware-in-the-Loop (HIL) Testing – Enables batch testing of AI models directly on Renesas hardware using downloaded test datasets, allowing fast and accurate validation under real-world conditions.

Whether you're working in e² studio or another supported IDE, these tools help accelerate edge AI development by improving accuracy, speeding up testing, and simplifying the path from prototype to deployment. In this blog, we'll explore each utility and how it contributes to building robust edge AI systems with high fidelity from start to finish.

How do Reality AI Utilities streamline the process?

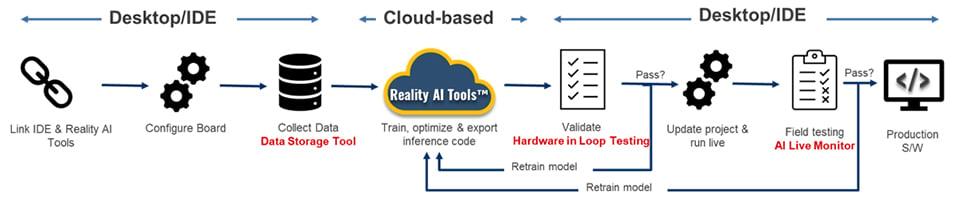

A typical embedded AI project follows this workflow:

- Write firmware to collect data.

- Pre-process the data into the file format expected by the AI tools.

- Develop and optimize the model.

- Validate the model on a device by writing firmware that measures accuracy and inference speed.

- Integrate the model into the embedded project for field testing.

Reality AI Utilities simplifies this workflow by automating several of the steps above. Here is an end-to-end workflow diagram:

The first step in the workflow is to retrieve your API key from the Reality AI Tools® cloud platform and link it with the Reality AI Utilities plug-ins, either within e² studio or using the standalone application. Once connected, you can seamlessly transfer data between your local environment and the cloud, whether working with both tools side by side or switching between them. Next, set up your embedded project using e² studio or the Renesas Smart Configurator to create your local workspace for data collection and for running AI inference modules directly on your hardware.

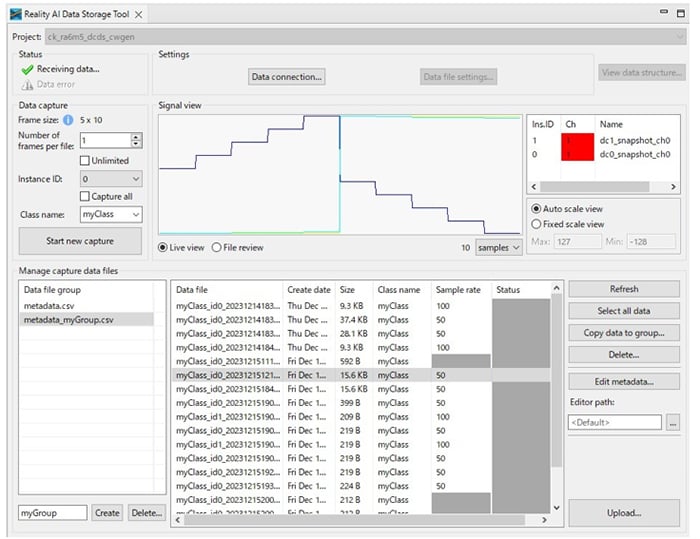

With the project in place, you can begin leveraging Reality AI Utilities, starting with the Data Storage Tool (DST). DST provides a simple yet powerful interface for capturing sensor or channel data, labeling it, and uploading it to your linked Reality AI Tools® project. The tool takes care of formatting and label consistency, allowing developers to focus on gathering the right kind and amount of data for their use case.

Under the hood, Renesas provides lightweight middleware called Data Collector/Data Shipper (DC/DS). These modules abstract your data flow, making it easy to collect training data and route it to your AI inference modules without rewriting code. Best of all, DC/DS works on any Renesas-based hardware, including custom boards. As long as you have UART or USB connectivity, you're not limited to using evaluation kits.

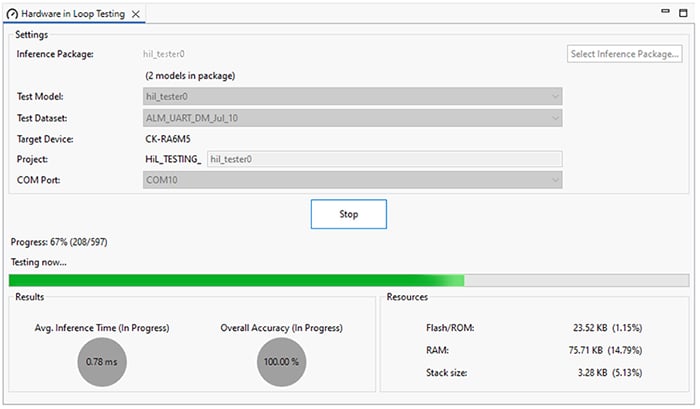

During model development, Reality AI Tools provides ongoing estimates for model accuracy, size, and complexity. However, because different compiler toolchains apply a variety of low-level optimizations, true model performance, such as inference time and memory usage, can only be accurately verified when the model is deployed on actual hardware.

To address this, Reality AI Utilities includes a powerful Hardware-in-the-Loop (HIL) testing feature. This tool connects directly to your Reality AI Tools account, automatically downloads the selected model and associated dataset, and integrates them into a test project within the Reality AI Utilities "VIEW" environment.

With just a single click, no coding required, the system builds the project, runs the model on your connected Renesas hardware, and reports real-world metrics such as inference time, model accuracy on a real dataset, and model memory requirements.
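To make the reported metrics concrete, here is a minimal host-side sketch of how accuracy and inference time can be summarized from per-sample test results. It is not the HIL tool's implementation: the `summarize` function, the tuple layout, and the sample values are all hypothetical and exist only for illustration.

```python
# Hypothetical per-sample results: (expected_class, predicted_class, inference_us).
# The values below are made up for illustration; they are not measurements.
results = [
    (0, 0, 410.0),
    (1, 1, 420.0),
    (1, 0, 415.0),  # a misclassified sample
    (0, 0, 435.0),
]


def summarize(samples):
    """Return accuracy and inference-time statistics for a batch of samples."""
    if not samples:
        raise ValueError("no results to summarize")
    # A sample counts as correct when the predicted class matches the label.
    correct = sum(1 for expected, predicted, _ in samples if expected == predicted)
    times = [inference_us for _, _, inference_us in samples]
    return {
        "accuracy": correct / len(samples),
        "mean_inference_us": sum(times) / len(times),
        "max_inference_us": max(times),
    }


report = summarize(results)
print(report)
```

Tracking the worst-case inference time alongside the mean is a design choice in this sketch: on a real-time embedded target, the slowest inference is often what determines whether a deadline is met.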

If your model delivers acceptable results, the next step is field testing, and that's where AI Live Monitor comes in. Built on the same lightweight DC/DS middleware, AI Live Monitor enables real-time, in-field testing of your developed models. It shows probability scores, which give engineers clear insight into the model's confidence level, and it supports smoothing, a post-processing technique that improves robustness by factoring in not just the current prediction but also previous predictions. All of this is accessible through a single, intuitive GUI, with no manual coding required. These capabilities let engineers move forward confidently with production deployment, or return to training with refined parameters and additional data if needed.
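As an illustration of the smoothing idea, the sketch below averages class scores over a short sliding window before picking a class. This is one common approach and an assumption on our part, not necessarily the algorithm AI Live Monitor uses; the `ScoreSmoother` class, the window length, and the example scores are hypothetical.

```python
from collections import deque


class ScoreSmoother:
    """Moving-average smoothing over the most recent class-score vectors."""

    def __init__(self, num_classes, window=5):
        self.num_classes = num_classes
        # deque with maxlen drops the oldest frame automatically.
        self.history = deque(maxlen=window)

    def update(self, scores):
        """Add one frame of raw scores; return (smoothed_class, averaged_scores)."""
        if len(scores) != self.num_classes:
            raise ValueError("unexpected number of class scores")
        self.history.append(list(scores))
        n = len(self.history)
        averaged = [
            sum(frame[c] for frame in self.history) / n
            for c in range(self.num_classes)
        ]
        # Pick the class with the highest averaged score (first one wins ties).
        best = max(range(self.num_classes), key=averaged.__getitem__)
        return best, averaged


# Illustrative scores: the third frame is a one-off spike toward class 1.
raw_frames = [[0.9, 0.1], [0.8, 0.2], [0.3, 0.7], [0.9, 0.1]]
smoother = ScoreSmoother(num_classes=2, window=3)
smoothed_classes = [smoother.update(frame)[0] for frame in raw_frames]
print(smoothed_classes)
```

In this example the raw per-frame decision would flip to class 1 on the third frame, while the smoothed decision stays on class 0 throughout. The trade-off is latency: a longer window suppresses more noise but reacts more slowly to a genuine change.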

Saving development time while ensuring model accuracy and correctness is a high priority in any project. It becomes even more important in embedded AI systems because of the inherent complexity of hardware, data collection, and sensor integration. By automating and simplifying the project workflow, Reality AI Utilities enables customers to accelerate the development of their target solutions with the highest fidelity of data while keeping model size to a minimum.

We encourage you to explore the tools to further enhance your development experience.