Why Edge AI?

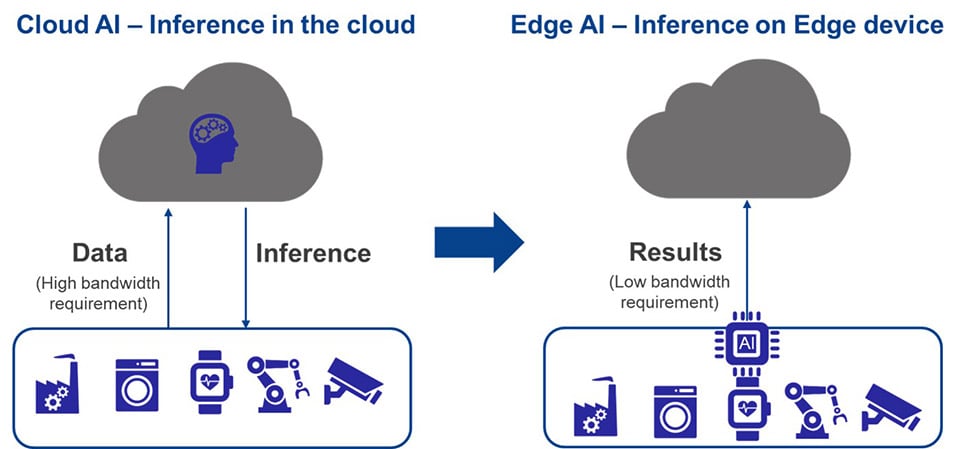

There has been a paradigm shift in the AI market. Previously, AI processing was done mainly in the cloud: endpoint devices gathered data from sensors and sent it to the cloud for inference and decision making, and the results were sent back to the endpoint devices. This approach required huge bandwidth to transmit massive amounts of data to the cloud. International Data Corporation (IDC) estimates that 79.4ZB of data will be sent from IoT devices to the cloud in 2025.

There is an increasing movement towards running AI inference on the edge devices themselves, which enables fast real-time responses and increased data privacy and security while avoiding the latency and costs associated with a cloud connection. This also lowers power consumption, making it suitable for battery-powered IoT applications. AI at the edge therefore offers autonomy, lower latency, lower power, higher security, and lower costs through reduced cloud bandwidth, all of which make it attractive for new and emerging applications.

MCUs are increasingly being used for Edge AI. Compared to an MPU, they provide better real-time response, lower power consumption, and lower cost, along with a fully integrated solution that simplifies product design and lowers development and BoM costs, making them ideal for low-power and cost-sensitive applications. High-performance MCUs with integrated hardware accelerators are now available that can handle the linear algebra operations required for neural network processing, such as dot products and fast, parallel matrix multiplications, convolutions, and transpositions. Smaller neural network models, software libraries, and ecosystem solutions optimized for resource-constrained MCUs are also available.

Build Power Efficient AI Applications with RA8P1 AI-Accelerated MCU

The RA8P1 MCUs are Renesas' first AI-accelerated single- and dual-core MCUs. They combine the high-performance Arm® Cortex®-M85 and Cortex-M33 CPU cores with the Arm Ethos™-U55 neural network processor (NPU), delivering a substantial uplift in AI/ML, DSP, and scalar performance at lower power consumption, which makes them ideal for Edge AI and IoT applications. Built on the advanced TSMC 22nm ultra-low leakage (22ULL) process, RA8P1 MCUs deliver an unprecedented 7300+ CoreMark raw performance and 256GOPS of AI performance, and address the need for lower power consumption in Edge AI applications.
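As a rough sanity check on the 256GOPS figure, peak NPU throughput can be estimated from the number of multiply-accumulate (MAC) units and the NPU clock. The sketch below is illustrative only: the 256 MACs per cycle, the 500MHz NPU clock, and the convention of counting each MAC as two operations (one multiply, one add) are assumptions that are not stated in this article.

```python
def peak_gops(macs_per_cycle: int, clock_hz: float, ops_per_mac: int = 2) -> float:
    """Estimate peak throughput in giga-operations per second (GOPS)."""
    return macs_per_cycle * ops_per_mac * clock_hz / 1e9


# Assumed values for illustration; they are not taken from this article.
ASSUMED_MACS_PER_CYCLE = 256
ASSUMED_NPU_CLOCK_HZ = 500e6

print(peak_gops(ASSUMED_MACS_PER_CYCLE, ASSUMED_NPU_CLOCK_HZ), "GOPS (peak, estimated)")
```

A peak figure like this is an upper bound. Sustained throughput on a real model depends on memory bandwidth and on how well each layer maps onto the MAC array.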

Together with large memory and a rich peripheral set, these devices enable demanding Voice, Vision AI and Real-Time Analytics applications directly on the device itself. Dual-core RA8P1 MCUs enable high processing power, efficient task partitioning between the two cores, and improved real-time performance. In addition, advanced security, immutable memory, and TrustZone® are built in to enable truly secure AI applications.

The Ethos-U55 NPU embedded in the RA8P1 is a dedicated processor optimized to execute the core operations of neural network models, such as matrix multiplications and convolutions, more efficiently and with lower power consumption than the CPU core. The Ethos-U55 is optimized for the lower-precision arithmetic (8-bit integer) used in AI models, which reduces complexity, memory usage, and power consumption without significantly reducing inference accuracy.
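To make the 8-bit integer arithmetic concrete, here is a minimal sketch of affine (scale and zero-point) quantization, a common way to map floating-point values onto INT8. It is a generic illustration, not the scheme used by any particular Renesas or Arm tool; the function names and sample weights are hypothetical.

```python
def choose_params(values):
    """Pick a scale and zero point so that [min, max] (including 0) maps onto int8."""
    lo = min(min(values), 0.0)
    hi = max(max(values), 0.0)
    scale = (hi - lo) / 255.0
    if scale == 0.0:          # all values are zero; any positive scale works
        scale = 1.0
    zero_point = round(-128 - lo / scale)
    return scale, zero_point


def quantize(values, scale, zero_point):
    """q = round(x / scale) + zero_point, clamped to the int8 range."""
    return [max(-128, min(127, round(x / scale) + zero_point)) for x in values]


def dequantize(q_values, scale, zero_point):
    """Recover approximate float values: x ~ (q - zero_point) * scale."""
    return [(q - zero_point) * scale for q in q_values]


weights = [-1.0, -0.25, 0.0, 0.5, 1.0]   # hypothetical float weights
scale, zero_point = choose_params(weights)
q = quantize(weights, scale, zero_point)
restored = dequantize(q, scale, zero_point)
worst = max(abs(a - b) for a, b in zip(weights, restored))
print(q, "max round-trip error:", worst)
```

Each value is stored in one byte instead of four, and the round-trip error stays within about one quantization step, which is why accuracy loss is usually small for well-calibrated models.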

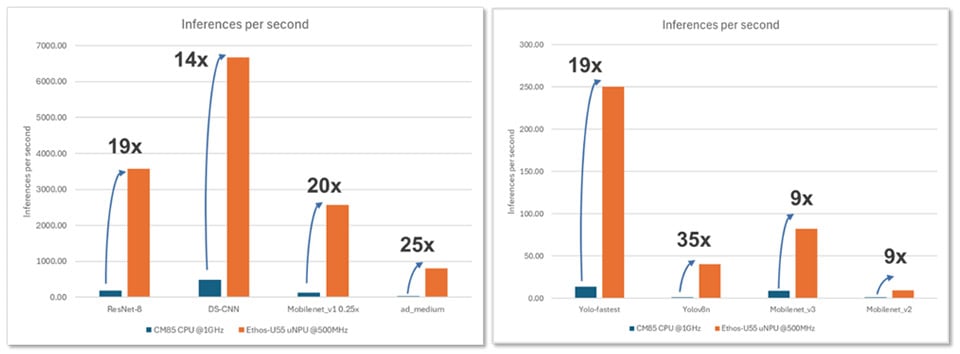

Renesas has demonstrated this performance uplift on RA8P1 MCUs across several AI/ML use cases, with inference on the Ethos-U55 NPU running significantly faster than on the CPU core.

Models Used:

- Image Classification – ResNet8, MobileNet v2, MobileNet v3

- Keyword Spotting – DS-CNN

- Visual Wake Words – MobileNet v1

- Object Detection – Yolo_fastest, Yolov8N

- Anomaly Detection – ad_medium

Enable Faster Application Development with RUHMI Framework

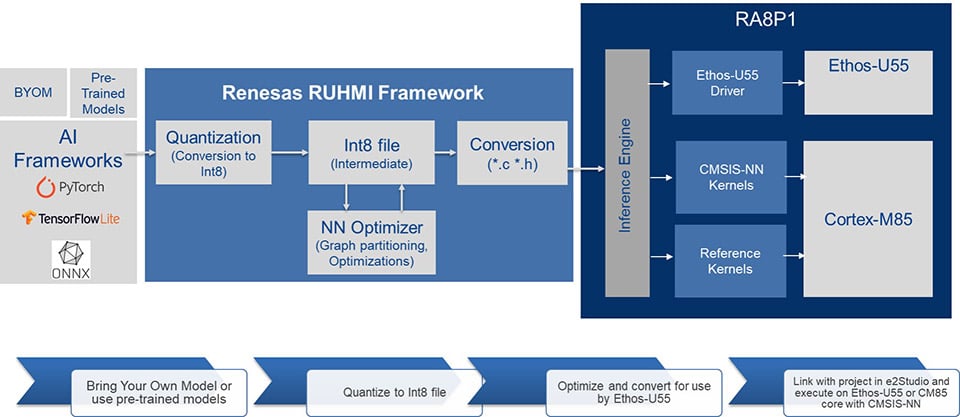

The RA8P1 AI solution features the highly configurable and optimized Robust Unified Heterogeneous Model Integration (RUHMI) Framework, which provides AI developers with the tools needed for faster and more efficient AI development. This is Renesas' first comprehensive AI framework for MCUs and MPUs, and it is integrated into the e2 studio IDE to generate and deploy highly optimized neural network models in a framework-agnostic manner. RUHMI enables model optimization, quantization, graph compilation, and conversion to an MCU-friendly format. Native support for the commonly used ML frameworks TensorFlow Lite, PyTorch, and ONNX is included, as are ready-to-use application examples and models optimized for RA8P1.

Typical AI Workflow with RUHMI Framework:

- Model Optimization and Compilation (Offline) – A pre-trained AI model is imported from a commonly used framework such as TensorFlow Lite, PyTorch, or ONNX. Using the RUHMI optimization and conversion tools, the model is first quantized to an INT8 intermediate format and optimized. This process involves graph partitioning, which separates operators between the NPU and the CPU, and compilation to an MCU-friendly format (typically *.c/*.h).

- Data Input and Pre-processing – Raw input data (an image from a camera, audio from a microphone) is captured by the RA8P1 MCU and pre-processed by the high-performance Cortex-M85 core for input to the AI model.

- Execution on the NPU – The CPU core then sends the pre-processed input data and the compiled AI model's command stream to the Ethos-U55 NPU for execution. The NPU reads the command stream and, using the input data and model weights (typically stored in local memory), processes each layer of the neural network.

- Output and Post-processing – Once the NPU has processed all layers of the neural network, it returns the inference results to the main CPU, which can then perform any necessary post-processing and take action.
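The graph partitioning step in the workflow above can be pictured with a small sketch. It is a simplified illustration, not RUHMI's actual algorithm: it treats the model as a linear sequence of operators (real models are graphs), and both the operator names and the set of NPU-supported operators are hypothetical.

```python
from itertools import groupby

# Hypothetical set of operators assumed to run on the NPU, for illustration only.
ASSUMED_NPU_OPS = {"CONV_2D", "DEPTHWISE_CONV_2D", "FULLY_CONNECTED", "ADD"}


def partition(ops, npu_ops=ASSUMED_NPU_OPS):
    """Group consecutive operators into (target, [operators]) segments."""
    def target(op):
        return "NPU" if op in npu_ops else "CPU"

    return [(tgt, list(group)) for tgt, group in groupby(ops, key=target)]


# Hypothetical model: two custom operators are not in the assumed NPU set.
model = ["CONV_2D", "DEPTHWISE_CONV_2D", "ADD",
         "CUSTOM_NMS", "FULLY_CONNECTED", "CUSTOM_DECODE"]

for tgt, segment in partition(model):
    print(f"{tgt}: {segment}")
```

Each NPU segment would correspond to a command stream handed to the accelerator, while CPU segments run as fallback code on the Cortex-M85. Fewer, longer NPU segments generally mean fewer hand-offs between the two.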

AI Applications Enabled by RA8P1

The RA8P1 MCU, with its high inference performance, low power consumption, and real-time processing capability, is ideal for a wide range of AI applications across various market segments. Here are some key applications enabled by RA8P1:

- Voice AI – Keyword spotting, voice recognition, speech recognition, noise reduction, and speaker identification

- Vision AI – Object detection, image classification, gesture recognition, face recognition, image analysis, and driver/vehicle monitoring

- Real-time Analytics – Anomaly detection, vibration analysis, and predictive maintenance

- Multimodal Applications – Smart HMI with voice and vision capability, enhanced surveillance cameras using voice and vision to detect events, and robotics with visual and auditory inputs for environment sensing and interaction

The next section shows how RA8P1 can simplify AI implementations, using two application examples.

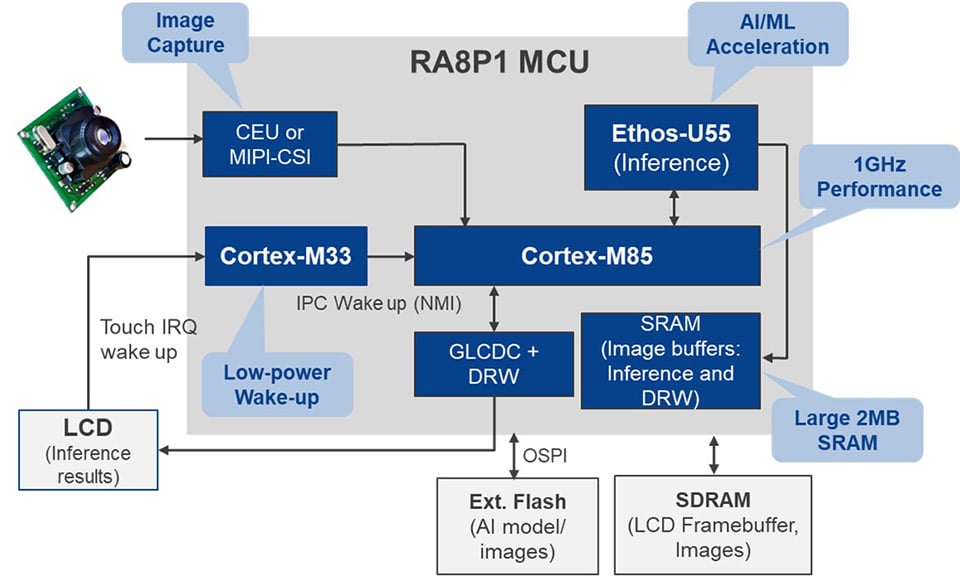

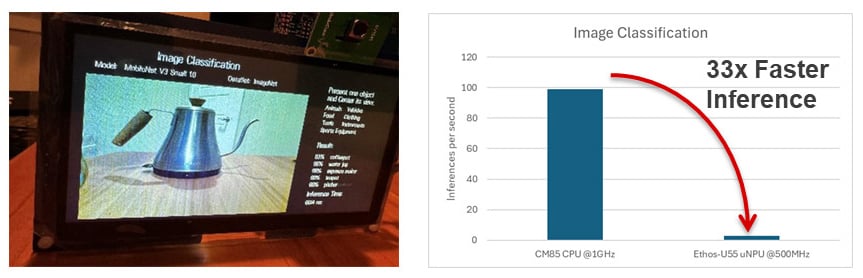

Application Example 1: Image Classification on RA8P1

The figure above shows an implementation of an image classification application. The RA8P1 integrates the CPU cores, the NPU, memory, and peripherals needed to build this vision AI application, all on a single chip. The application analyzes an input image and assigns it a label from a predefined set of categories. The neural network model is trained on a vast dataset of labeled images and deployed on the RA8P1 MCU. For inference, a new input image is fed into the model and passes through the layers of the trained network. The output layer then provides a probability distribution across all categories, and the category with the highest probability is assigned as the image's label. This output (the image label and its confidence) can then be sent to the display or the cloud. In our implementation, we see a 33x improvement in inference speed with the Ethos-U55 compared with using the CPU core.
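The final step described above, turning the output layer's scores into a label, can be sketched as a softmax followed by an argmax. This is a generic floating-point illustration with hypothetical labels and scores; on the device the output would typically be INT8 values that are dequantized first.

```python
import math


def softmax(logits):
    """Convert raw scores to probabilities that sum to 1 (numerically stable form)."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(logits, labels):
    """Return the most probable label and its probability."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return labels[best], probs[best]


LABELS = ["cat", "dog", "car"]          # hypothetical categories
label, confidence = classify([1.2, 3.4, 0.3], LABELS)
print(f"{label} ({confidence:.2f})")
```

Subtracting the maximum score before exponentiating does not change the result but avoids overflow for large scores.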

Image classification can be used in diverse applications:

- Security – Identifying weapons, recognizing people, and detecting anomalies

- Retail – Creating product catalogs by category and managing inventory

- Agriculture – Identifying crop disease and classifying plants

- Smart Cities – Identifying traffic lights/signs and pedestrians

- Smart Appliances – Identifying objects inside a refrigerator

Application Example 2: Driver Monitoring System on RA8P1

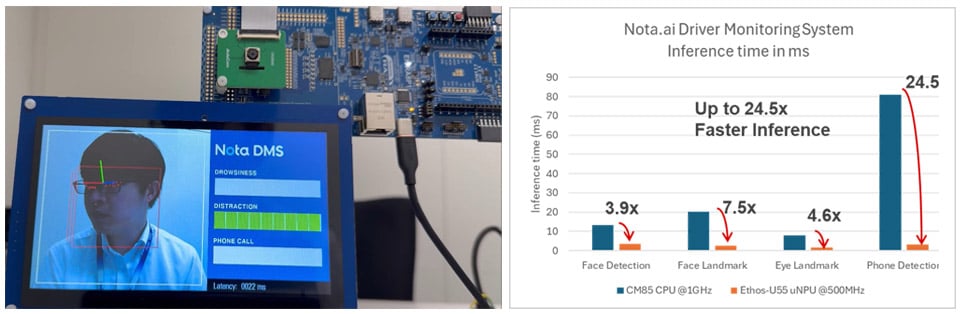

This application shows the Nota-AI Driver Monitoring System (DMS), an in-cabin safety solution that enhances road safety for all aspects of vehicle travel. Using RA8P1, the Nota-AI DMS detects unregistered drivers, driver drowsiness, cell phone usage, and driver distractions such as smoking.

With the higher performance of the RA8P1, we see a 4x to 24x increase in inference performance for the four models used in this application: face detection, face landmark, eye landmark, and phone detection.

The DMS finds applications in dashboard cameras, vehicle traveling data recorders, and driver monitoring systems.

Both of these Vision AI applications make optimal use of the resources on the RA8P1 MCU:

- Efficient input image acquisition through the image sensor

  - The RA8P1 includes a dedicated MIPI CSI-2 interface with an image scaling unit and a 16-bit CEU parallel camera interface, either of which can capture raw image input data.

- High-performance inference processing with the Ethos-U55 NPU

  - The Ethos-U55 AI accelerator on the RA8P1 MCU offloads the CPU core and processes complex AI models more efficiently and with lower power consumption than the CPU core. It receives processed images from the MIPI CSI-2 or parallel CEU interface.

  - A pre-trained AI model (e.g., an image classification model such as MobileNet v1) is optimized for the RA8P1 using RUHMI tools and loaded onto the NPU.

  - The Ethos-U55 NPU performs the actual AI inference at very high speeds (up to 256GOPS) and with high power efficiency.

- Faster application processing with Arm Cortex-M85 and Cortex-M33

  - The high-performance 1GHz Cortex-M85 core with Arm Helium vector extensions can be used for pre- and post-processing of the input images or audio data and the inference results. Operators not supported by the Ethos-U55 can also be executed by the Cortex-M85 core in fallback mode, accelerated by CMSIS-NN libraries. The core also executes the application code.

  - The 250MHz Cortex-M33 core can be used for low-power wake-up and housekeeping tasks.

- Efficient storage of images, model weights, and activations with on-chip memory and memory interfaces

  - The large on-chip 1MB MRAM and 2MB SRAM are crucial for storing AI model weights, images, and intermediate activations. Integrated embedded MRAM provides advantages over flash, such as faster writes and higher endurance and retention.

  - The MCUs also support high-throughput external memory interfaces (OSPI with XIP and decryption on the fly, and 32-bit SDRAM) for larger models.

- Advanced graphics peripherals for LCD panels

  - The Graphics LCD Controller (with parallel RGB or MIPI DSI interfaces) and 2D engine can be used for processing and rendering the images and inference results to the LCD display.

- Flexible connectivity options

  - Several connectivity options exist to transmit inference results, images, or alerts/notifications either to local devices or to the cloud, for storage or analysis.
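The memory points above lend themselves to a quick budget check when choosing a model. The sketch below is a deliberately crude first-pass estimate: the MRAM and SRAM sizes come from the text, while the model sizes and the helper name `placement` are hypothetical, and it ignores application code, frame buffers, and other data that would share the same memories.

```python
MRAM_BYTES = 1 * 1024 * 1024   # 1MB on-chip MRAM (from the text)
SRAM_BYTES = 2 * 1024 * 1024   # 2MB on-chip SRAM (from the text)


def placement(weight_bytes, activation_bytes):
    """Suggest where INT8 weights and peak activations could live."""
    return {
        "weights": "on-chip MRAM" if weight_bytes <= MRAM_BYTES else "external memory",
        "activations": "on-chip SRAM" if activation_bytes <= SRAM_BYTES else "external memory",
    }


# Hypothetical models: (INT8 weight bytes, peak activation bytes).
small_model = (300_000, 150_000)
large_model = (3_000_000, 150_000)

print(placement(*small_model))
print(placement(*large_model))
```

With INT8 quantization, weight storage is roughly one byte per parameter, so a model with a few hundred thousand parameters fits on-chip under these assumptions, while a multi-million-parameter model would rely on the external OSPI or SDRAM interface.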

Edge AI applications benefit greatly from the use of AI-accelerated MCUs, which enable applications where real-time performance, low power, and security are critical concerns. The addition of the NPU to low-power MCUs has been a transformational change in the AI solutions landscape. The new RA8P1 MCUs drastically reduce latency, enable data privacy, and minimize power consumption, making them ideal for battery-powered applications. The entire development process is supported by Renesas' comprehensive RUHMI framework, which helps developers optimize and deploy their AI models efficiently on the RA8P1 hardware.

For more information, visit www.renesas.com/ra8p1