Edge AI is no longer a futuristic idea. It is an essential technology driving today's smart devices across industries, from industrial automation to consumer IoT applications. But building AI applications at the edge still comes with challenges: complex AI model development, hardware constraints, and long development cycles.

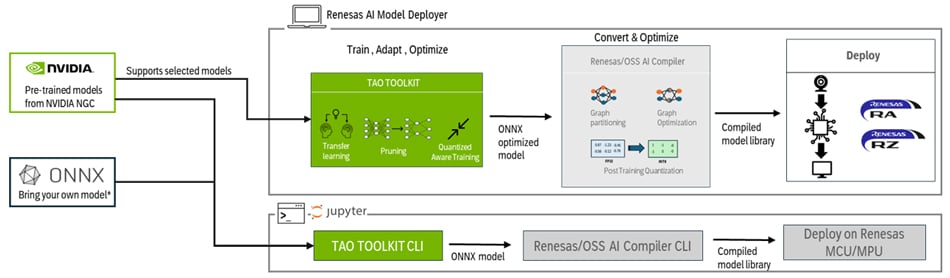

By integrating NVIDIA TAO into our intuitive graphical user interface (GUI) for our RZ/V MPUs and RA8 MCUs, Renesas is removing traditional barriers for embedded developers. Whether you're just starting your AI journey or looking to optimize your edge deployment, this approach offers a streamlined, scalable path forward.

The Renesas AI Model Deployer is designed to run locally on standard workstations, making it convenient for developers to prototype and test without cloud-based infrastructure.

And for developers who want to go beyond the basics? Detailed Jupyter notebooks are provided, allowing users to explore deeper levels of customization, integration, and optimization. This way, users can either get started quickly or tailor their own solutions with full control.

What Is NVIDIA TAO and Why It Matters

NVIDIA TAO, short for Train, Adapt, and Optimize, is a low-code AI development suite that dramatically simplifies building deep learning models for vision AI applications. Built on top of TensorFlow and PyTorch, TAO exposes vision AI development through a few simple APIs.

Rather than starting from scratch, users can select from a library of over 100 pre-trained models, including models for object detection, classification, segmentation, and more. TAO supports critical AI development features like:

- Transfer Learning: Fine-tune large models on your own datasets, drastically reducing the amount of data and time required.

- Pruning: TAO offers pruning strategies to either improve model accuracy by removing redundant weights or shrink model size for faster inference on edge devices.

- Quantization-Aware Training (QAT): Prepare models for INT8 quantization during training, ensuring performance-optimized deployment on edge hardware.

- Export to ONNX Format: Easily move trained models across frameworks and devices.
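
To make two of the features above more concrete, here is a minimal, framework-free sketch of what pruning and INT8 quantization do to a list of weights. It is an illustration only, not TAO's implementation: the function names (`magnitude_prune`, `quantize_int8`, `dequantize`) are hypothetical, the pruning shown is simple unstructured magnitude pruning, and the quantization is a symmetric per-tensor scheme. TAO's own strategies may differ.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the smallest-magnitude fraction of weights.

    Illustrative unstructured pruning; `sparsity` is the fraction to remove.
    """
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be in [0, 1]")
    n_prune = int(len(weights) * sparsity)
    # Indices ordered by absolute value, smallest first.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned


def quantize_int8(weights):
    """Symmetric per-tensor INT8 quantization; returns (int values, scale)."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the signed 8-bit range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map INT8 values back to approximate floating-point weights."""
    return [v * scale for v in q]


# Hypothetical weights, chosen only for demonstration.
example_weights = [0.5, -0.02, 1.27, 0.01, -0.8, 0.03]
pruned_weights = magnitude_prune(example_weights, sparsity=0.5)
int8_weights, int8_scale = quantize_int8(pruned_weights)
restored_weights = dequantize(int8_weights, int8_scale)
print(pruned_weights, int8_weights, restored_weights)
```

Quantization-aware training applies a quantize/dequantize round trip like this one during training, so the model learns weights that tolerate the rounding error before it is deployed in INT8.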

Importantly, TAO makes these powerful capabilities accessible through a low-code environment, minimizing the need for expertise in AI frameworks or machine learning theory.

Yet even with low-code tools, embedded developers often face real-world hurdles: setting up frameworks, ensuring compatibility between model formats and hardware, and fine-tuning models without AI expertise.

Renesas AI Model Deployer

This is where the Renesas AI Model Deployer truly shines—bridging those gaps by offering:

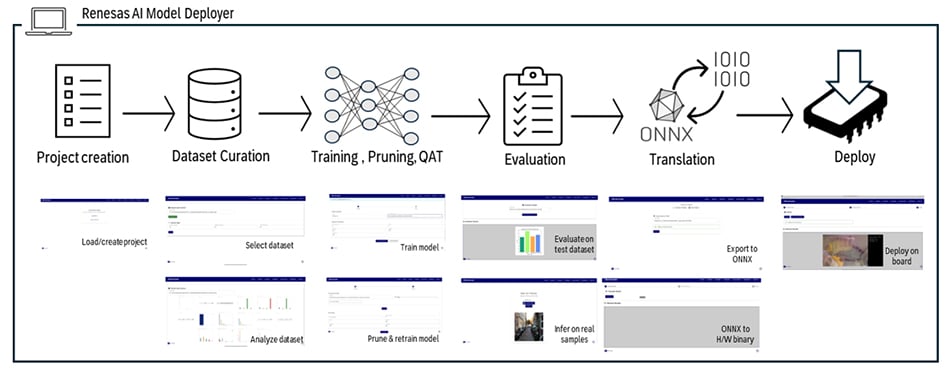

- Guided workflows for project creation, training, evaluation, and deployment.

- Pre-integrated toolchains that eliminate setup headaches and avoid library mismatches.

- Hardware-aware optimizations to ensure models are ready for edge deployment.

In short, the GUI helps to:

- Lower the learning curve for embedded teams without deep AI knowledge.

- Accelerate time to proof-of-concept by packaging best practices into a simple flow.

- Improve deployment reliability by guaranteeing hardware compatibility at every step.

The Renesas AI Model Deployer is a practical tool designed specifically for embedded developers who need an efficient way to manage vision AI workflows. Users can set up the environment quickly by running just two shell scripts. The GUI then covers the complete AI development pipeline: project creation (model, board, and task selection), dataset split and analysis, model training and optimization (QAT and pruning), visual evaluation (mAP or Top-K accuracy), sample-based inference testing, and streamlined deployment to hardware.
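
For readers unfamiliar with the Top-K accuracy metric mentioned above, the following is a minimal sketch of how it is conventionally computed for a classifier. The function name `top_k_accuracy` and the sample scores are assumptions for illustration; this is not code from the Renesas AI Model Deployer.

```python
def top_k_accuracy(scores, labels, k=1):
    """Fraction of samples whose true label is among the k highest scores.

    scores: one list of per-class scores per sample.
    labels: the true class index for each sample.
    """
    if len(scores) != len(labels):
        raise ValueError("scores and labels must have the same length")
    if not scores:
        return 0.0
    hits = 0
    for row, label in zip(scores, labels):
        # Class indices ranked by score, highest first, truncated to k.
        top_k = sorted(range(len(row)), key=lambda c: row[c], reverse=True)[:k]
        if label in top_k:
            hits += 1
    return hits / len(scores)


# Hypothetical model outputs for three samples and three classes.
sample_scores = [
    [0.1, 0.7, 0.2],
    [0.5, 0.3, 0.2],
    [0.2, 0.3, 0.5],
]
sample_labels = [1, 1, 0]
top1 = top_k_accuracy(sample_scores, sample_labels, k=1)
top2 = top_k_accuracy(sample_scores, sample_labels, k=2)
print(top1, top2)
```

Top-1 counts only the single best guess, while a larger k credits the model whenever the correct class appears among its k best guesses, which is useful when classes are visually similar.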

The GUI also supports live camera inference, USB streaming, and intuitive deployment screens, giving developers immediate feedback and confidence that their models are functioning in real-world scenarios. By wrapping cutting-edge AI techniques into a click-driven experience, we empower customers to build smarter, more efficient edge products faster, easier, and with significantly reduced risk.

For those looking to create production-grade systems, the provided Jupyter notebooks open up further flexibility. Developers can extend pipelines with custom data curation workflows, explore additional TAO features such as sophisticated augmentation strategies and advanced hyperparameter tuning, or even implement Bring Your Own Model (BYOM) approaches. These notebooks turn the foundation provided by the GUI into a fully customizable toolkit ready for real-world applications.

Technical Integration Examples

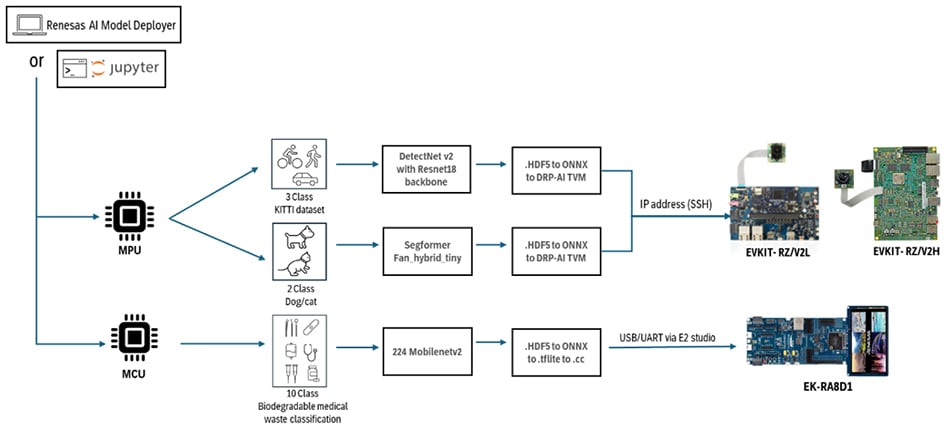

The Renesas AI Model Deployer currently supports several hands-on integration examples that demonstrate the flexibility and scalability of the toolchain across a range of devices and AI use cases. These examples serve to highlight how the Renesas AI Model Deployer streamlines model integration, optimization, and deployment, from MPUs to MCUs, making real-world AI implementation more accessible and production-ready.

Object Detection with DetectNet v2 on RZ/V2H and RZ/V2L MPUs

- Model: DetectNet v2 with ResNet-18 backbone

- Dataset: KITTI dataset (cars, pedestrians, cyclists)

- Deployment: Quantized models deployed through Dynamically Reconfigurable Processor for AI (DRP-AI), featuring live camera inference and bounding box visualization.
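
Detection results such as these bounding boxes are typically scored with mAP, which in turn relies on intersection over union (IoU) to decide whether a predicted box matches a ground-truth box. The sketch below shows the IoU calculation under stated assumptions: boxes are `(x_min, y_min, x_max, y_max)` tuples in pixels, and the function name `iou` is illustrative rather than part of any Renesas or NVIDIA API.

```python
def iou(box_a, box_b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    # Corners of the overlapping rectangle, if any.
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])

    # A negative width or height means the boxes do not overlap.
    inter_w = max(0.0, ix_max - ix_min)
    inter_h = max(0.0, iy_max - iy_min)
    inter = inter_w * inter_h

    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# Hypothetical predicted and ground-truth boxes.
predicted = (0, 0, 10, 10)
ground_truth = (5, 5, 15, 15)
overlap = iou(predicted, ground_truth)
print(overlap)
```

A detection is usually counted as correct when its IoU with a ground-truth box of the same class exceeds a chosen threshold. The threshold itself depends on the dataset and evaluation protocol.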

Image Classification with SegFormer-FAN on RZ/V2 Series MPUs

- Model: SegFormer-FAN hybrid Vision Transformer

- Dataset: Cat and dog classification dataset (proof-of-concept to demonstrate hybrid vision transformer performance on MPUs)

- Deployment: End-to-end workflow leveraging PyTorch training, ONNX export, and DRP-AI quantization.

Image Classification with MobileNetV2 on RA8D1 MCU

- Model: MobileNetV2

- Dataset: Biodegradable medical waste classification (10 classes, e.g., syringes, gloves, pipettes)

- Deployment: Quantization via TFLite and deployment through e2 studio.

In addition to these ready-to-use examples, the included Jupyter notebooks allow developers to experiment beyond the basics. Examples include integrating additional datasets, advanced model retraining, and leveraging Bring Your Own Model flows for fully customized solutions.

Conclusion

Our integration of NVIDIA TAO into a local, GUI-driven environment lowers the barriers for embedded developers to adopt Vision AI. With pre-built workflows, compatibility with powerful edge processors, and the flexibility to scale through Jupyter notebooks, developers can move faster from prototype to deployment.

Whether you are creating a smart industrial camera, an AI-powered sensor, or a next-generation IoT device, the integrated suite of tools provided by Renesas has you covered.

To get started with AI Model Deployer powered by NVIDIA TAO on Renesas boards, visit the Renesas AI Model Deployer for Vision AI page to learn more about the full offering, and explore our GitHub page, linked from the landing page, for the latest code and examples.