High-Precision Recognition Realized with AI!

Trying Out the Gesture Recognition UI Development Tool

Gestures are increasingly adopted as the UI (User Interface) of embedded devices so that the devices can be operated "without touching". The development hurdles for adopting a gesture UI are not low, however. It often happens that the UI fails to recognize the intended hand movement and responds poorly to any hand other than the developer's.

QE for Capacitive Touch, a development support tool for capacitive touch sensors, lets you build high-precision gesture software using AI (deep learning) in about 30 minutes. While using the tool, we explain its mechanism, the design of gestures, how the tool feels in operation, and the recognition accuracy of the completed gestures.

The following items are explained in order.

- Mechanism

- Gesture Design

- Development of gestures < Part 1 - Creation of AI learning data >

- Development of gestures < Part 2 - AI learning, C-source integration >

- Development of gestures < Part 3 - Monitoring >

- Recognition accuracy of the completed gestures

Mechanism

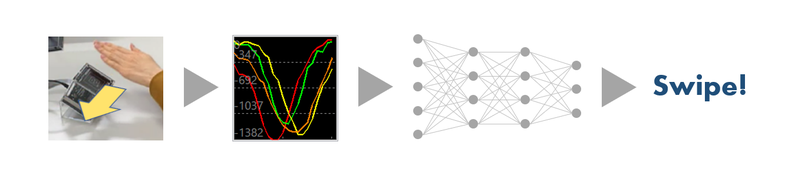

Gesture software created by the tool uses deep learning technology. The AI learns how the capacitance values change when you bring your hand close to multiple electrodes (sensors), which lets it distinguish gestures such as “Swipe” and “Tap”. In addition to providing a mechanism that makes it easy to incorporate this ultra-small AI into a device, e-AI performs AI processing entirely inside the endpoint device, achieving a safe and fast response.

Gesture Design

Because the type of gesture is judged from the shape of the sensor signal waveform, in theory any gestures can be distinguished as long as their waveforms differ in shape.

Examples of product applications include selecting items with "Left Swipe" and "Right Swipe" operations, confirming with a "Tap" operation, and going back with a "Return" operation that moves the hand 90 degrees from top to left. In addition, because one gesture is defined as lasting from when the hand approaches the sensor until it moves away, you need to take your hand away from the sensor once before making the next gesture.
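The approach-to-release rule described above can be sketched as a small state machine. This is only a minimal illustration, not the tool's actual implementation: the type `gesture_segmenter_t`, the functions `segmenter_init` and `segmenter_update`, the threshold, and the idea of feeding a single summed capacitance change per sample are all assumptions made for this example.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical segmenter: one gesture lasts from hand approach to hand release. */
typedef struct {
    int32_t  threshold;    /* summed change above which a hand counts as present (assumed) */
    bool     hand_present; /* true while a gesture is in progress */
    uint32_t length;       /* number of samples collected for the current gesture */
} gesture_segmenter_t;

void segmenter_init(gesture_segmenter_t *s, int32_t threshold)
{
    s->threshold = threshold;
    s->hand_present = false;
    s->length = 0;
}

/* Feed one sample (capacitance change summed over all electrodes).
 * Returns the gesture length in samples at the moment the hand leaves,
 * and 0 at all other times. */
uint32_t segmenter_update(gesture_segmenter_t *s, int32_t summed_change)
{
    bool is_near = summed_change > s->threshold;

    if (is_near) {
        s->hand_present = true;
        s->length++;
        return 0;
    }
    if (s->hand_present) {
        uint32_t finished = s->length;
        s->hand_present = false;
        s->length = 0;
        return finished; /* hand released: the gesture is complete */
    }
    return 0; /* idle: no hand near the sensor */
}
```

Because a gesture is only reported on release, a second gesture cannot start until the signal has dropped below the threshold, which is why the hand must leave the sensor between gestures.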

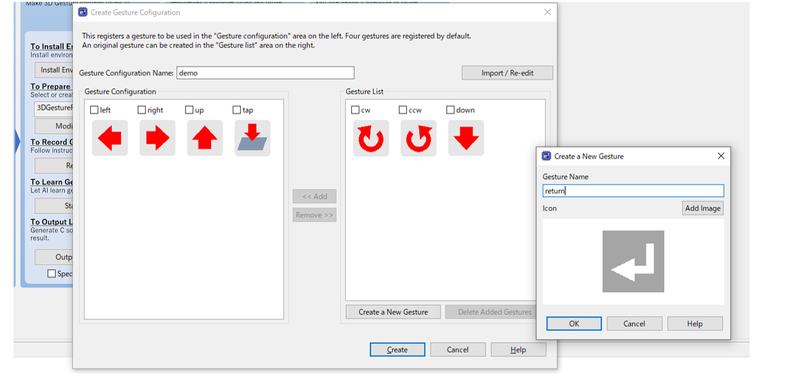

As an example of a non-contact UI, let's create four types of gestures: "Left Swipe", "Right Swipe", "Up Swipe", and "Tap".

Open the dialog for editing the gesture configuration from the "Edit Gesture Configuration" item of the QE for Capacitive Touch workflow. Select “left”, “right”, “up”, and “tap” from the presets, and remove "down". If you want to create your own gesture (e.g. "return"), you can register a new one by clicking the "Create New Gesture" button and giving it a name such as “return”. You can also register an image file you have created as an icon. The registered icon is used only to identify gestures within the tool and is not related to the program, so it is recommended that you register an image that is easy for users to understand.

Development of gestures < Part 1 - Creation of AI learning data >

Now let's actually create the gesture AI.

To develop a high-precision AI (one with a high probability of correct answers), a neural network with optimal parameters must be trained on a sufficiently large learning data set. QE for Capacitive Touch makes this very easy because it automates all the steps that would otherwise require trial and error.

Open the dedicated dialog from the "Register gesture" item of QE for Capacitive Touch, and start by registering the AI learning data for "Left swipe". When you connect the emulator and press the [Start Gesture Registration] button, the waveform acquisition program runs automatically on the evaluation board. All you have to do is make the gesture (left swipe) by actually moving your hand from right to left over the sensor.

The accuracy of a deep learning AI generally depends on the quality of the learning data. Too little learning data, or incorrectly registered data, does not lead to a good result.

For example, an AI that identifies "cat" pictures is trained on a large number of cat pictures. If there are only 10 cat pictures, or if "dog" pictures are mixed in, you can imagine that the result will not be good.

As explained in the dialog, this tool recommends 50 or more data items per gesture. Since all I had to do was move my hand, I was able to register 50 items in no time. If the set includes a gesture such as "Return", whose waveform is a little more complicated, it is recommended to increase the number of registrations slightly, to about 80 each.

The QE for Capacitive Touch has data import and export functions, so multiple developers can acquire the data and combine it later.

Data acquired in this way tends to produce a gesture AI that responds well to anyone's hand movements, because it does not over-adapt to the hand movements of one particular individual. In addition, even if the sensors cannot be arranged evenly due to the shape of the electrodes or chassis, the learning data can be created from the signal waveforms unique to that chassis, so an AI suited to the product under development can be built.

Development of gestures < Part 2 - AI learning, C-source integration >

AI learning is fully automated. Just press the "Start" button and wait a little. After pre-processing, such as automatically expanding (increasing) the learning data, AI learning proceeds.
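The article does not say how the tool expands the learning data. As a rough illustration of what expanding a waveform data set can mean in general, the sketch below derives extra waveforms from one recorded waveform by scaling its amplitude and by shifting it in time. The function names and both transformations are assumptions chosen for this example and should not be read as the tool's method.

```c
#include <stddef.h>

/* Scale every sample by num/den, e.g. 9/10 to imitate a hand held slightly farther away. */
void augment_scale(const int *src, int *dst, size_t n, int num, int den)
{
    for (size_t i = 0; i < n; i++) {
        dst[i] = (src[i] * num) / den;
    }
}

/* Delay the waveform by `shift` samples, padding the start with zeros. */
void augment_shift(const int *src, int *dst, size_t n, size_t shift)
{
    for (size_t i = 0; i < n; i++) {
        dst[i] = (i >= shift) ? src[i - shift] : 0;
    }
}
```

Each derived waveform keeps the label of the original, so one registered gesture yields several slightly different training examples.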

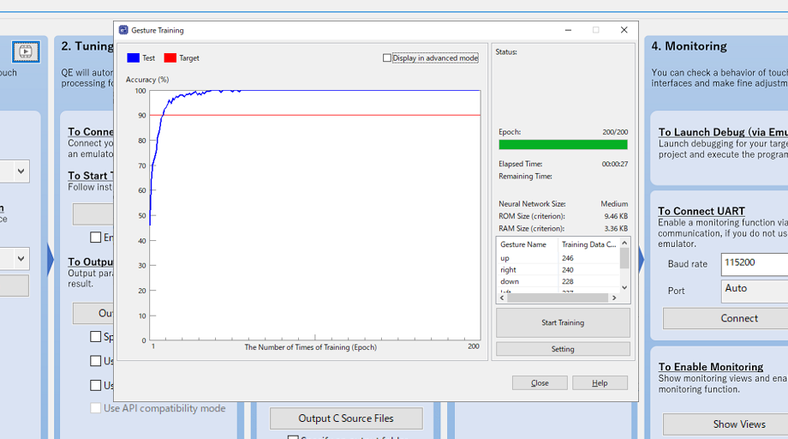

The blue line in the graph shows the accuracy of the AI during learning. You can see that the correct answer rate increases as learning progresses. If the graph is above the red target line after about 5 minutes, you can conclude that there is no problem with accuracy. If it does not clear the line, review the learning data, for example by adding more data or deleting incorrect data.

This learning dialog has several setting items, but for now we will use the defaults.

The only decision to make is the size of the AI model (neural network); this time I will leave it at the default, "Medium". Basically, AI accuracy and ROM / RAM size are in a trade-off relationship, so if it fits in the microcomputer you are using, selecting a larger size gives higher accuracy.

Once learning is complete, it's time to incorporate the result into your microcontroller.
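To get a feel for why model size and ROM usage move together, the following sketch counts the parameters of a simple fully connected network. The article does not describe the network architecture the tool generates, so the layer sizes, the one-byte-per-parameter figure, and the function names here are purely illustrative assumptions.

```c
#include <stddef.h>

/* Parameter count of a fully connected network:
 * each layer contributes (inputs * outputs) weights plus one bias per output. */
size_t dense_param_count(const size_t *layer_sizes, size_t num_layers)
{
    size_t params = 0;
    for (size_t i = 0; i + 1 < num_layers; i++) {
        params += layer_sizes[i] * layer_sizes[i + 1] + layer_sizes[i + 1];
    }
    return params;
}

/* Rough ROM estimate: parameters times the storage size of one parameter. */
size_t dense_rom_bytes(const size_t *layer_sizes, size_t num_layers,
                       size_t bytes_per_param)
{
    return dense_param_count(layer_sizes, num_layers) * bytes_per_param;
}
```

For a hypothetical network with layer sizes 64, 32, 4, this gives 2212 parameters; widening the hidden layer to 64 gives 4420. Doubling one hidden layer therefore roughly doubles the ROM needed for the weights, which is the kind of cost paid for the extra accuracy.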

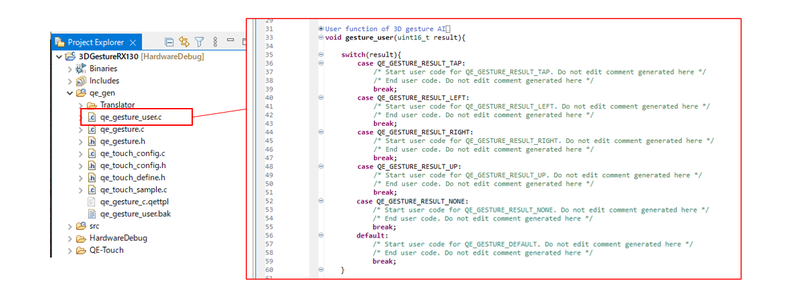

First, press the "Generate C Source" button in the workflow of QE for Capacitive Touch. The process of converting the learning result to C source runs automatically, and the following source files are registered in the project.

qe_gesture_user.c: a source file that describes the processing performed after a gesture is recognized. If you open this file and look at the code, you can see that switch cases are lined up for each gesture name you registered. Once the gesture AI is complete, all that remains is to write the processing for each gesture there. Then, once you add code that calls this gesture processing from the main function of the project, the integration is complete.
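The shape of such a per-gesture switch can be sketched as follows. This is not the generated qe_gesture_user.c: the enum `gesture_id_t`, the struct `menu_state_t`, and the function `on_gesture` are hypothetical names invented for this example, and the actions simply mirror the "swipe to select, tap to confirm" use case mentioned earlier.

```c
#include <stdint.h>

/* Hypothetical gesture IDs; the generated file uses the names registered in the tool. */
typedef enum {
    GESTURE_NONE = 0,
    GESTURE_LEFT,
    GESTURE_RIGHT,
    GESTURE_UP,
    GESTURE_TAP
} gesture_id_t;

/* Hypothetical application state: a menu with one selectable item. */
typedef struct {
    int32_t selected_item;
    int32_t item_count;
    int32_t confirmed_item; /* -1 until an item is confirmed by a tap */
} menu_state_t;

/* One case per registered gesture; only the case bodies are application-specific. */
void on_gesture(menu_state_t *menu, gesture_id_t gesture)
{
    switch (gesture) {
    case GESTURE_LEFT:
        if (menu->selected_item > 0) {
            menu->selected_item--;
        }
        break;
    case GESTURE_RIGHT:
        if (menu->selected_item < menu->item_count - 1) {
            menu->selected_item++;
        }
        break;
    case GESTURE_UP:
        menu->selected_item = 0; /* example action: jump back to the first item */
        break;
    case GESTURE_TAP:
        menu->confirmed_item = menu->selected_item;
        break;
    case GESTURE_NONE:
    default:
        break; /* nothing recognized: do nothing */
    }
}
```

Keeping all application behavior inside the case bodies means the gesture set can be retrained without touching the rest of the program, as long as the gesture names stay the same.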

Development of gestures < Part 3 - Monitoring >

Let's run the gesture AI we just developed.

Open a dedicated view from "Start Monitoring" in the QE for Capacitive Touch workflow, connect to the emulator and run the program. Press the Enable Monitoring button at the top right of the monitoring view for gestures to start drawing the sensor waveform.

Let's try the gestures learned by AI now.

As the video shows, the gestures are recognized fairly well. Gestures judged by the AI are displayed as icons, and the probability of each gesture is shown in the "Score" column. While moving your hand, you can adjust parameters such as the score (probability) threshold above which a movement is recognized as a gesture, and how short or long a gesture may be and still be recognized.

The AI can also be tuned. A gesture whose score does not reach the threshold is displayed with a "-" (hyphen) icon, and a movement may also be judged as the wrong gesture. In either case, you can select the waveform from "History" in the monitoring view and add it to the learning data of the correct gesture type at the touch of a button. If recognition doesn't work as expected, use this function to tune the AI.
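How a score threshold and gesture-length limits interact can be sketched as below. This is an assumption-laden illustration rather than the tool's logic: `gesture_filter_t`, `gesture_decide`, and `GESTURE_REJECTED` are hypothetical names, scores are assumed to be per-class probabilities, and gesture length is assumed to be measured in samples.

```c
#include <stddef.h>
#include <stdint.h>

#define GESTURE_REJECTED (-1) /* corresponds to a movement that is not accepted as a gesture */

/* Hypothetical tuning parameters, mirroring the ones adjustable in the monitoring view. */
typedef struct {
    float    score_threshold; /* minimum score needed to accept a gesture */
    uint32_t min_samples;     /* shortest gesture to accept */
    uint32_t max_samples;     /* longest gesture to accept */
} gesture_filter_t;

/* Returns the index of the best-scoring class, or GESTURE_REJECTED when the
 * movement is too short, too long, or not confident enough. */
int gesture_decide(const gesture_filter_t *f, const float *scores,
                   size_t num_classes, uint32_t length_samples)
{
    if (num_classes == 0 ||
        length_samples < f->min_samples ||
        length_samples > f->max_samples) {
        return GESTURE_REJECTED;
    }

    size_t best = 0;
    for (size_t i = 1; i < num_classes; i++) {
        if (scores[i] > scores[best]) {
            best = i;
        }
    }

    if (scores[best] < f->score_threshold) {
        return GESTURE_REJECTED;
    }
    return (int)best;
}
```

Raising the threshold reduces false detections at the cost of rejecting more genuine gestures, so it is a value worth adjusting while watching real hand movements.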

Recognition accuracy of the completed gestures

The accuracy is high and the inference is in real time

So far, following the workflow has taken about 30 minutes.

If you try the gesture software you've created, you'll see that it runs with accuracy high enough to pose no practical problems, and fast enough that inference does not feel slow. No operation requires AI expertise, so any embedded engineer can develop it.

There are still few devices around town that can be operated by gestures, but I think demand will grow along with other non-contact UIs such as voice recognition. If you are an engineer wondering what you could do with this, I encourage you to try QE for Capacitive Touch and the evaluation board for 3D gestures.

Try different gestures

Having found that simple gestures such as swipes work well, I'll try some more complicated ones: a single rotation of the hand, an edge swipe that gently moves the hand inward from the edge of the sensor, and characters such as "1" and "2".

The results are summarized in the table below. They depend greatly on the training data and on hand movements during monitoring, so please treat them as reference values.

Recognition accuracy (%)

| AI model | Swipe (up, down, left, right: 4 directions) | Diagonal swipe (8 directions including diagonal) | Edge swipe (4 directions) | Rotate (clockwise / counterclockwise: 1 turn) | Numbers (draw 1, 2, 3, 4 with finger) |
|---|---|---|---|---|---|
| Large | 100 | 96.9 | 100 | 86.7 | 100 |
| Medium | 93.8 | 87.5 | 85 | 66.7 | 75 |
| Small | 90.6 | 75 | 85 | 50 | 55 |

You can see that relatively simple gestures such as swipes are recognized accurately enough with an AI model of any size. In comparison, the accuracy of relatively complex gestures such as rotation varies with the size of the AI model used. That said, a large AI model is not always the best choice, because it requires more computation at inference time. Try the gestures in the "monitoring view" to see if they work as intended.
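One way to act on this trade-off is to pick the smallest model that still meets a target accuracy for the gestures you need. The helper below is a hypothetical sketch of that selection; the function name and the target values in the usage note are assumptions, while the accuracy figures come from the table above.

```c
#include <stddef.h>

/* Accuracies must be ordered from the smallest model to the largest.
 * Returns the index of the smallest model whose accuracy reaches the target,
 * or -1 when no model does. */
int smallest_model_meeting(const double *accuracy_by_size, size_t num_models,
                           double target)
{
    for (size_t i = 0; i < num_models; i++) {
        if (accuracy_by_size[i] >= target) {
            return (int)i;
        }
    }
    return -1;
}
```

With the four-direction swipe figures (90.6, 93.8, 100 for Small, Medium, Large) and an assumed target of 90%, the Small model already qualifies. With the rotation figures (50, 66.7, 86.7) and an assumed target of 80%, only the Large model does.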

Usage Environment

- IDE:e² studio V2022-01

- QE Tool:QE for Capacitive Touch V3.1.0

- CTSU FIT module (r_ctsu_qe) 2.00

- QE Touch FIT module (rm_touch_qe) 2.00

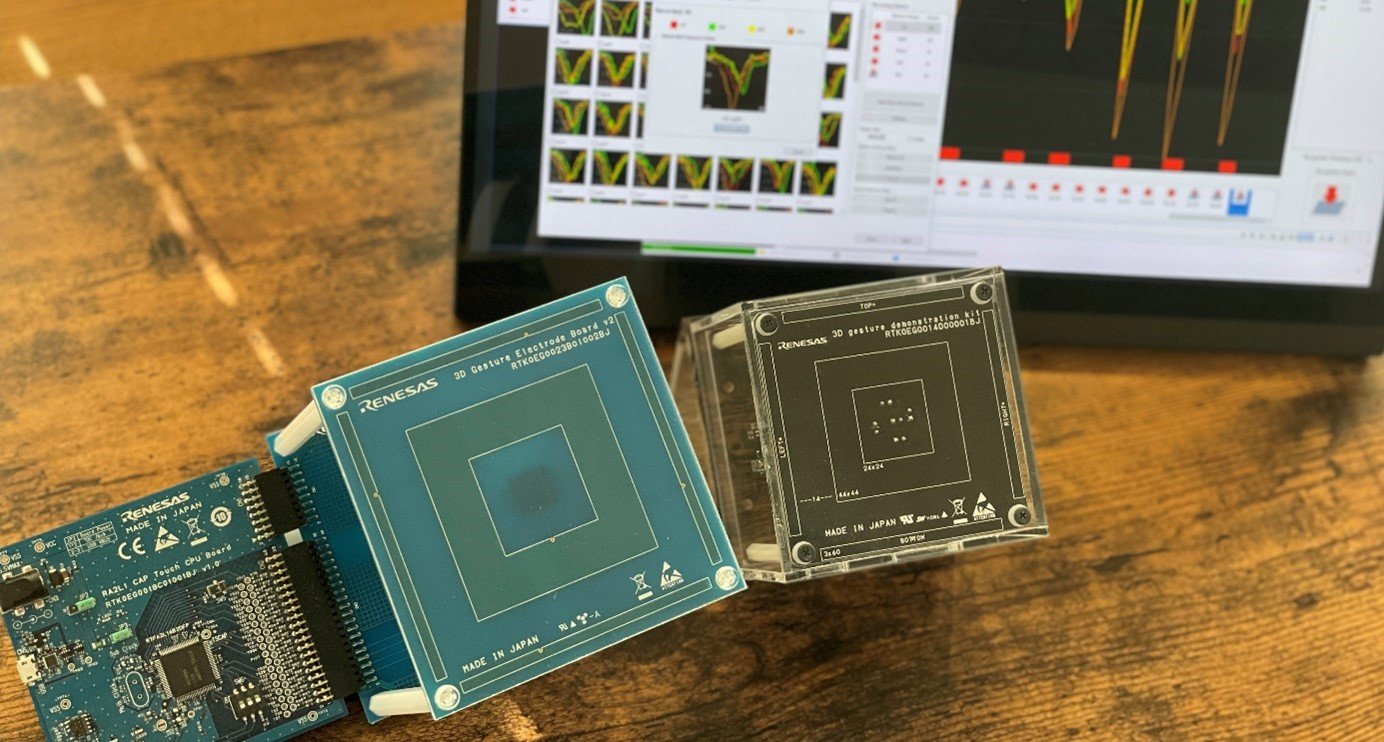

- CPU Board:RX130 CapTouch CPU Board – RTK0EG0004C01001BJ

*The above microcomputer is used, but all microcomputers equipped with Touch IP CTSU / CTSU2 are supported.

(RX, RA and RL78 Families, Renesas SynergyTM Platform) - Sensor Board:3D Gesture Electrode Board - RTK0EG0023B

- Emulator:E2Lite (And user interface cable for E2 emulator)

- Compiler:CC-RX V3.03.00

Related Documentation & Downloads

The 3D gesture recognition feature of QE for Capacitive Touch is an extended facility of the e² studio integrated development environment from Renesas.

This feature provides a touch-free user interface by combining Renesas' capacitive touch key solution with the e-AI solution.

For more details and to download the software, see the following product information pages.