In the previous blog of the Renesas Functional Safety Blog Series [1], we discussed applying the ISO 26262 standard to SEooC software. Another important software-related topic in ISO 26262 is Part 8, clause 11: Confidence in the use of software tools. With the emergence of advanced driver assistance systems (ADAS) and autonomous driving (AD) vehicles, artificial intelligence (AI) and machine learning (ML) techniques are increasingly applied in the automotive industry. AI/ML technology is used for object detection, sensor fusion, path planning, and so forth. AI/ML development relies heavily on software tools such as model training tools, compilers, and simulators. ADAS and AD use cases are often tied to safety goals, meaning that the tools used to support the development of these applications shall be evaluated and/or qualified according to ISO 26262 requirements. In this blog, we will discuss what makes AI/ML tools unique when considering safety, give an overview of specific AI/ML tools and how they contribute to ADAS/AD development, and explore the challenges in ensuring confidence in the use of AI/ML software tools in a safety context.

In ISO 26262:2018 Part 8, clause 11 [2], requirements and recommendations are given to increase confidence in the use of software tools in a safety context. The two requirements that are most relevant to software tool vendors are:

- 11.4.5 Evaluation of a software tool by analysis

- 11.4.6 Qualification of a software tool

Clause 11.4.5 requires the TCL (Tool Confidence Level) to be determined based on TI (Tool Impact) and TD (Tool error Detection). Clause 11.4.6 requires the qualification of a software tool to be achieved by various methods depending on the tool TCL and target ASIL of the safety goal.
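The way TI and TD combine into a TCL can be sketched as a small lookup. The mapping below follows the combinations used later in this blog (TI1 always gives TCL1; with TI2, TD1/TD2/TD3 give TCL1/TCL2/TCL3). The class and function names are illustrative assumptions, not part of the standard or of any Renesas tool.

```python
from enum import IntEnum


class TI(IntEnum):
    """Tool Impact class."""
    TI1 = 1  # a tool malfunction cannot affect a safety requirement
    TI2 = 2  # a tool malfunction can introduce, or fail to detect, an error


class TD(IntEnum):
    """Tool error Detection class."""
    TD1 = 1  # high confidence that a malfunction is prevented or detected
    TD2 = 2  # medium confidence
    TD3 = 3  # all other cases (low confidence)


def tool_confidence_level(ti: TI, td: TD) -> str:
    """Return the TCL for one tool in one specific use case."""
    if ti == TI.TI1:
        # Without tool impact, the detection class does not matter.
        return "TCL1"
    return {TD.TD1: "TCL1", TD.TD2: "TCL2", TD.TD3: "TCL3"}[td]


if __name__ == "__main__":
    for ti in TI:
        for td in TD:
            print(ti.name, td.name, "->", tool_confidence_level(ti, td))
```

Because the result depends on the use case, the same tool may need to be evaluated more than once, with a separate TI/TD pair for each way it is used.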

These requirements apply to all software tools which are “incorporated for the development of a system, or its hardware or software elements” as explained in clause 11.4.1, including AI/ML tools. AI/ML tools differ from other generic software tools used during development in the following ways:

- Many AI/ML tools are open source, which makes tool qualification difficult. The early development and progress of AI/ML occurred mainly in statistics, economics, and mathematical applications [3]. These consumer industry developments rely heavily on open-source software and tools. The difficulty with using open-source tools in the automotive industry is obtaining the required qualification and confidence in the use of the tools.

- Safety awareness needs to be improved among AI/ML teams. AI/ML techniques are still relatively new to the automotive industry. According to FutureBridge Analysis and Insights, automotive is considered one of the “New Adopter” industries when it comes to using AI, while ICT (Information and Communication Technology), Financial Services, Healthcare, and a few other industries are considered “Matured” or “Aspirational” industries [4]. Applying the existing automotive standard to this new technology is therefore still in a trial phase, and awareness of how to ensure safety when using AI/ML technology still needs to improve.

  It’s worth mentioning that more and more standards regarding AI/ML and safety are available or under development, such as UL 4600 “Safety standard for the evaluation of autonomous products” [6] (published in 2020), ISO/IEC AWI TR 5469 “Artificial intelligence – Functional safety and AI systems” [7] (under development), and so forth.

- The complexity of AI/ML models results in a high TD class being selected, that is, a lower degree of confidence that a malfunction and its corresponding erroneous output will be prevented or detected [5]. In some traditional applications, the TD can be deemed low (e.g. TD1 or TD2) if there is a high degree of confidence that a malfunction and its corresponding erroneous output will be prevented or detected [5] by the standard verifications within the product safety lifecycle. For example, if a code generator is malfunctioning, there is high confidence that the malfunction will be caught, because the generated code would very likely fail to compile. However, due to the complexity of AI/ML models, fully verifying the tool output through standard verification would considerably complicate the product safety lifecycle. For example, if a CNN training tool generates a model that distinguishes traffic lights from other objects, it’s almost impossible to judge whether the model is correct by examining the model itself, or by observing how the model works in an application. Therefore, a higher TD shall be selected (e.g. TD3).

Before discussing tool confidence, let’s look at how AI/ML technologies are used in today’s automotive products, specific AI/ML-related tools, and how the tools are contributing to automotive product development.

One example of an application dependent on AI/ML technology could be a lane-keeping feature in an ADAS module. It can be assumed that the HARA (hazard analysis and risk assessment) for such a lane-keeping system yields an ASIL B safety goal to prevent insufficient lateral adjustment that results in a lane/roadway departure. To determine when to steer the vehicle to keep it in the current lane, the ADAS module shall first determine the vehicle position and motion trajectory relative to the lane by recognizing the lane markings. To recognize the lane markings, the ADAS module can use AI/ML technology to classify whether a pixel is part of a lane or not. Usually, a large number of images is used to train the model. Each image is annotated to mark which pixels belong to a lane and which do not. Training produces a mathematical model that takes a test image as input and outputs the classification of each pixel (belonging to a lane or not).
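The input/output relationship of such a model can be illustrated with a toy sketch. Here a simple brightness threshold stands in for the trained model, which is purely an assumption for illustration; a real lane-detection network is far more complex. The function names `predict_lane_mask` and `pixel_accuracy` are hypothetical.

```python
from typing import List

Image = List[List[float]]  # grayscale intensities in [0, 1]
Mask = List[List[int]]     # 1 = pixel belongs to a lane, 0 = it does not


def predict_lane_mask(image: Image, threshold: float = 0.5) -> Mask:
    """Stand-in for a trained model: label bright pixels as lane pixels."""
    return [[1 if px >= threshold else 0 for px in row] for row in image]


def pixel_accuracy(predicted: Mask, annotated: Mask) -> float:
    """Fraction of pixels whose predicted label matches the annotation."""
    total = sum(len(row) for row in annotated)
    correct = sum(
        p == a
        for pred_row, ann_row in zip(predicted, annotated)
        for p, a in zip(pred_row, ann_row)
    )
    return correct / total


if __name__ == "__main__":
    test_image = [[0.1, 0.9, 0.2],
                  [0.0, 0.8, 0.1]]
    annotation = [[0, 1, 0],
                  [0, 1, 0]]
    mask = predict_lane_mask(test_image)
    print(mask)
    print(pixel_accuracy(mask, annotation))
```

The annotated mask plays the same role as the per-pixel annotations in the training and testing images described above.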

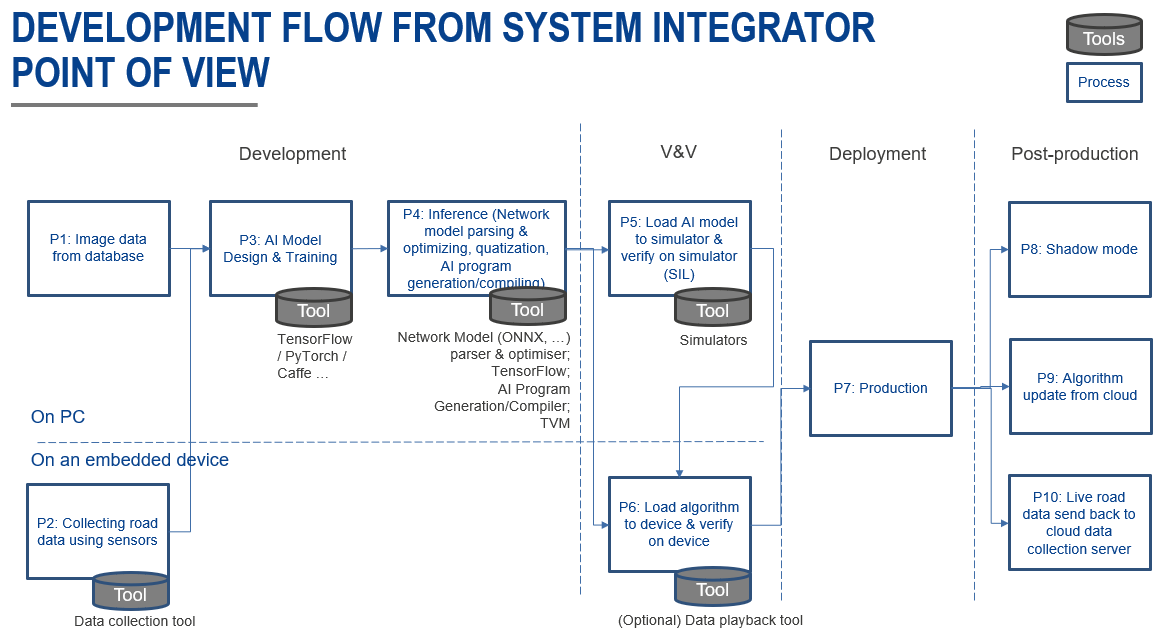

The accompanying diagram shows a sample process for developing such a model and translating it into machine-executable code.

The development flow can be divided into four stages:

- development

- V&V (verification and validation)

- deployment

- post-production

The first stage is the development stage, and its starting point is data. Data can come from an existing database (P1), or can be collected using sensors with the support of data collection tools (P2). The data is usually split into a “training data set” to train the model, and a “testing data set” to evaluate the performance of the model. Developers take the training data set and use it to design and train AI models for tasks such as image segmentation and object classification (P3). The main tools involved here are tools like TensorFlow [9], PyTorch [10], Caffe [11], and so forth. These are mainly open-source, PC-based tools. Once the model is ready, the next step is inference (P4). Inference involves network model parsing and optimizing, quantization, and AI program generation and compiling. It’s possible to use individual tools for each step in P4, but it’s also possible to use one tool that covers all of these functions, such as TVM [12]. It’s worth mentioning that quantization was once performed only after model training (post-training quantization), but it’s now possible to combine it with model training (quantization-aware training). At this point, the model is available for verification and adjustment, as necessary.
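Two steps from this stage, the training/testing split and post-training quantization, can be sketched in a few lines of plain Python. This is a minimal illustration under simplifying assumptions (a random 80/20 split, symmetric int8 quantization with one scale per weight list); real tool chains such as those named above do considerably more, and the function names here are hypothetical.

```python
import random
from typing import List, Sequence, Tuple


def split_dataset(samples: Sequence, train_fraction: float = 0.8,
                  seed: int = 0) -> Tuple[list, list]:
    """Shuffle the samples and split them into training and testing sets."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric post-training quantization: w is approximated by q * scale."""
    max_abs = max(abs(w) for w in weights) or 1.0  # avoid a zero scale
    scale = max_abs / 127.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Map int8 values back to floating point to inspect the rounding error."""
    return [q * scale for q in quantized]


if __name__ == "__main__":
    train, test = split_dataset(range(10))
    print(len(train), len(test))

    weights = [0.5, -1.0, 0.25, 0.0]
    q, scale = quantize_int8(weights)
    print(q, dequantize(q, scale))
```

The rounding error introduced by quantization is one reason the quantized model, and not only the floating-point model, needs to be verified in the next stage.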

The second stage is the V&V stage. Typically, verification is done on either simulators (P5) or the actual hardware (P6). If using simulators, one should first load the developed model into a simulator, then verify it (P5). The simulator is the key player in this step. There are different levels of simulators supporting different needs. Functional simulators run faster, but may represent the hardware less accurately. Bit-accurate simulators reproduce the hardware results exactly, but may run slower. Simulators are good for repeated development cycles, where verification and feedback are used for model improvement. However, it’s not sufficient to verify with simulators alone; verification must eventually be done on the actual hardware. If using actual hardware, one should first load the developed model onto the actual hardware, then verify it (P6). Note that the verification can be performed in two ways. One way is data playback: pre-recorded data, or even simulated image data, is fed back into the processing pipeline for verification. A data playback tool or an environment simulator can be used to assist in this step. The other way is live road verification, for example, running the actual hardware in a drive campus or on the road. This can be very costly for OEMs.

After the model and software are ready for production, the process can proceed to the deployment stage (P7). This is where the software is loaded into the ECU and deployed onto a vehicle.

The last stage is the post-production stage. For AI/ML, development doesn’t stop at production. Because the operating environment is constantly changing, and new and improved models become available every few months, AI/ML models can and must be updated and deployed more frequently. Some OEMs are adopting the “shadow mode” feature together with over-the-air (OTA) functions to facilitate the upgrade process [14]. The so-called “shadow mode” (P8) is a design where a separate piece of software with AI/ML models runs on the ECU in the road vehicle. This separate software can be the candidate for the next software upgrade. Running in shadow mode doesn’t cause any actuation: the shadow mode software only calculates in the background, and the results are used for performance evaluation. OTA assists in downloading software onto the road vehicle, as well as in the software upgrade (P9).

What’s unique about AI/ML development is that instead of traditional software requirements described in natural language, UML, or another descriptive format, the requirements of AI/ML development are very often represented as data. The reason is that data are used to describe the scenario and train the model. The profile of performance vs the amount of training data varies depending on the type of model. For most deep learning and neural network models, the performance continues to improve as the amount of data used for training increases [8]. The optimal amount of training data is the amount that results in the best performance. To collect an adequate amount of up-to-date data, some test vehicles are equipped with data collection functions, which send the collected data back to the cloud (P10).
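The shadow mode arrangement described above can be sketched as follows. This is a simplified illustration, not how any particular OEM implements it: the `ShadowRunner` class, the scalar steering command, and the disagreement tolerance are all assumptions made for the example.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class ShadowRunner:
    """Runs a candidate model beside the active one without actuating it."""
    active_model: Callable[[float], float]
    shadow_model: Callable[[float], float]
    tolerance: float = 0.05
    disagreements: List[Tuple[float, float, float]] = field(default_factory=list)

    def step(self, sensor_input: float) -> float:
        command = self.active_model(sensor_input)
        # The shadow result is computed in the background and only logged.
        shadow_command = self.shadow_model(sensor_input)
        if abs(command - shadow_command) > self.tolerance:
            self.disagreements.append((sensor_input, command, shadow_command))
        # Only the active model's output is ever returned for actuation.
        return command


if __name__ == "__main__":
    runner = ShadowRunner(active_model=lambda x: 0.5 * x,
                          shadow_model=lambda x: 0.6 * x)
    for value in (0.1, 1.0, 2.0):
        runner.step(value)
    print(len(runner.disagreements), "disagreements logged for evaluation")
```

The logged disagreements are the kind of performance evaluation data that could be sent back for analysis before the candidate software is promoted through an OTA upgrade.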

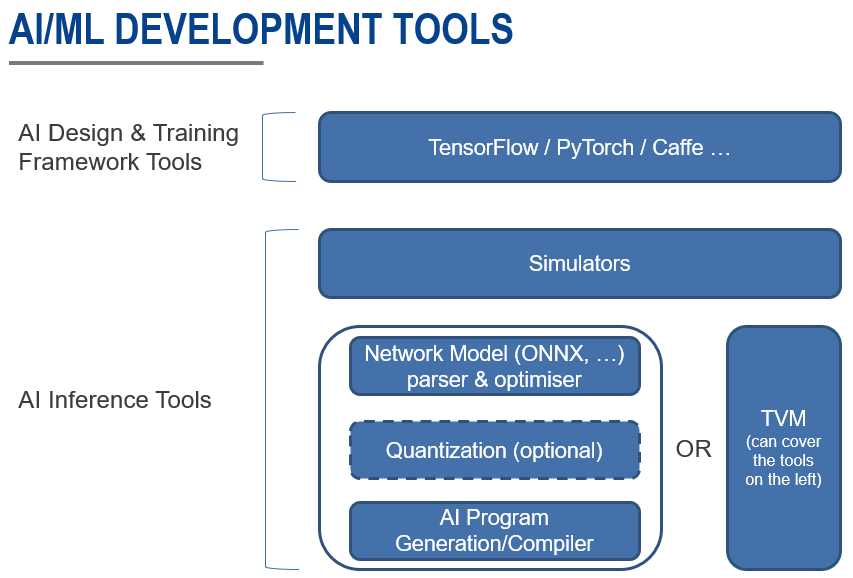

The description above is the general approach to developing AI/ML software for automotive applications. Many tools assist in the development of AI/ML models. The accompanying image gives a general view of some of the tools involved in the different stages of AI/ML model development. Some tools are open source, such as TensorFlow [9], PyTorch [10], and TVM [12]; some are proprietary, such as certain simulators, AI generation tools, and compilers. AI/ML technology is developing very quickly, with new approaches and research appearing constantly, and the tools used for each stage are evolving with it.

Now that we understand what the tools are, what they are used for, and how they are used, we need to consider compliance with ISO 26262 when using these tools in development. This helps tool users gain proper confidence in using the tool in a safety context, and also helps tool vendors provide proper verification/validation and evidence to support tool users in gaining that confidence.

One challenge is to determine a proper TCL for the tool. As required by ISO 26262, the software Tool Confidence Level shall be determined [5] for each tool in its use case. This means that even for the same tool, the TCL can be different if it is used in a different context. As an example, simulators are usually used to do a quick sanity check of the AI/ML models, while the actual verification and validation is done on the hardware. In this case, the TI of the simulator will be low (TI1), because the output of the simulator does not go into the final product and does not impact the safety of the final product. However, in some systems, validation is not done fully on the hardware, but partially on the simulator and partially on the actual hardware. One benefit is that this makes development easier because it is less dependent on hardware. Another benefit is that certain functional simulators running on high-performance PCs can process data faster than the physical hardware, so more data can be processed for verification. An equivalence study can be done to show that the simulator is adequately equivalent to the actual hardware, in order to claim the effectiveness of verification with the simulator. In this use case, because the quality of the simulator affects the verification result and the quality of the final product, the TI will be deemed high (TI2). The same tool can therefore have a different TCL in a different use case, and the tool supplier must understand the highest TCL required by the market, as well as the highest ASIL safety goal of the application. With this information, the tool supplier can select a suitable qualification method for the tool. Renesas analyzes the possible market use cases and proposes assumed TCLs for all the tools Renesas develops and provides to customers. Renesas also provides functional safety support to answer questions about the assumed tool use case or the assumed TCL.
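As a rough illustration of what an equivalence study might check, the sketch below feeds the same inputs to a simulator and to the hardware and records every output that differs by more than a tolerance. The two callables, the tolerance value, and the fixed-point stand-in for the hardware are assumptions for the example; an actual study would define its own acceptance criteria and data sets.

```python
from typing import Callable, Dict, Iterable


def equivalence_report(simulator: Callable[[float], float],
                       hardware: Callable[[float], float],
                       inputs: Iterable[float],
                       tolerance: float = 1e-3) -> Dict[str, object]:
    """Compare simulator and hardware outputs sample by sample."""
    mismatches = []
    samples = 0
    for x in inputs:
        samples += 1
        sim_out, hw_out = simulator(x), hardware(x)
        if abs(sim_out - hw_out) > tolerance:
            mismatches.append((x, sim_out, hw_out))
    return {
        "samples": samples,
        "mismatches": mismatches,
        # An empty input set proves nothing, so it is not counted as equivalent.
        "equivalent": samples > 0 and not mismatches,
    }


if __name__ == "__main__":
    def sim(x: float) -> float:
        return 0.5 * x  # floating-point model in the simulator

    def hw(x: float) -> float:
        return round(0.5 * x * 256) / 256  # hypothetical fixed-point hardware

    report = equivalence_report(sim, hw, [0.0, 0.25, 1.0, 3.3])
    print(report["samples"], report["equivalent"], report["mismatches"])
```

A report like this is evidence about the simulator in one use case only; if the simulator is used differently, the TI and therefore the TCL need to be reassessed.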

A second challenge is acquiring tool qualification for open-source tools. As mentioned previously, a considerable number of AI/ML tools are open source. With open-source tools, there is no guaranteed quality control, let alone any tool qualification. If someone is using open-source tools within a safety context, and the analysis shows that the tool has TCL2 or TCL3, the qualification becomes an issue. As required by ISO 26262, regardless of the target safety goal ASIL, tool qualification is always required for TCL2 or TCL3 tools. There are several solutions the user can choose from:

- Validate the tool by taking advantage of the publicly available tool source code. Although there is no guaranteed quality control for open-source tools, the benefit is that the source code is open to the public. This makes it possible for users to do validation on their own. However, this can be time-consuming and requires a lot of effort. There are some commercially available services and tools for these tool qualification activities.

- Another approach is to adjust the use case to lower the TCL. For example, TensorFlow is a tool that can be used to train a CNN model. The CNN model will be part of the final product and will affect safety, so the TI will be high (TI2). In one use case, 500 images are used for training and 500 images are used for testing. Here the confidence in error detection is low (TD3), because with this limited number of images, testing may not reveal that the model is not working as expected. The result is a high TCL (TCL3), and proper qualification shall be performed. If the TCL can be lowered to TCL1, tool qualification can be avoided. To move from TD3 to TD1, a much larger number of images can be used for training and testing. The confidence in error detection for the adjusted use case can then be considered high (TD1), because with a significantly greater image count, errors are much more likely to be detected.
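One way to see why a larger test set raises detection confidence is a simple statistical bound. If a model passes n independent, representative test images with zero failures, the largest failure rate still consistent with that result at a given confidence level is 1 - (1 - confidence)^(1/n). This is a generic statistical illustration under those assumptions, not a method prescribed by ISO 26262, and it does not by itself justify a particular TD class.

```python
def zero_failure_upper_bound(n_tests: int, confidence: float = 0.95) -> float:
    """Upper bound on the failure rate after n_tests passes and no failures.

    Assumes the tests are independent and representative of real use.
    """
    if n_tests <= 0:
        raise ValueError("n_tests must be positive")
    if not 0.0 < confidence < 1.0:
        raise ValueError("confidence must be between 0 and 1")
    return 1.0 - (1.0 - confidence) ** (1.0 / n_tests)


if __name__ == "__main__":
    for n in (500, 5_000, 50_000):
        bound = zero_failure_upper_bound(n)
        print(f"{n:>6} test images: failure rate below {bound:.5%} "
              f"with 95% confidence")
```

With 500 test images the bound is roughly 0.6%, so a model that misclassifies a few images per thousand could pass unnoticed; a hundredfold increase in test images tightens the bound by about the same factor.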

Obtaining tool qualification for open-source tools is usually difficult, but it can also be challenging for proprietary tools. Although proprietary tools are subject to a stricter quality control process, that doesn’t mean all of them are automatically suitable for use in a safety context. ISO 26262:2018 Part 8, clause 11.4.6 “Qualification of a software tool” [2] defines requirements for obtaining tool qualification based on the TCL and target ASIL. For example, if a system integrator wishes to use a tool for an ASIL D safety requirement, and analysis shows that the TCL for this use case is TCL3, then according to clause 11.4.6, the integrator is highly recommended to use methods 1c and/or 1d in “Table 4 – Qualification of software tools classified TCL3” to demonstrate the tool’s capability to support such a safety goal and use case. 1c is described as “Validation of the software tool in accordance with 11.4.9” and 1d is described as “Development in accordance with a safety standard”. A tool vendor can have a tool that undergoes a thorough development process to ensure quality, but that doesn’t automatically meet the qualification requirements defined by ISO 26262. The tool shall be further checked to ensure there is no gap in meeting these requirements. For Renesas tools with assumed TCL2 or TCL3, Renesas defines the qualification method according to ISO 26262 recommendations and supports customers with qualification. The goal is to provide sufficient evidence to assist customers with development, product assessment, and completing the product’s safety case.

In summary, in this blog, we first discussed the special characteristics of AI/ML tools when considering tool confidence. Then the development flow of AI/ML applications was introduced, as well as the tools involved and the stages in which these tools are engaged. Lastly, three challenges in gaining confidence for AI/ML-related tools were given. In conclusion, it is necessary to plan at an early stage which tools should be chosen to fit the development needs, as well as the safety expectation. Open-source tools and proprietary tools both have their pros and cons. Open-source tools can be more flexible, and sometimes more powerful, because the development cycle is faster and more contributors are available from the open-source developer community. As a result, open-source tools can provide some unique features that are not found in proprietary tools. But when tool qualification is required, it’s very difficult to obtain for open-source tools. With proprietary tools, qualification is possible, although the tools are sometimes less flexible and often have a limited scope. Choosing the right tool, especially for AI/ML-related applications, is important to ensure the safety of the product. Renesas proposes recommended tools for each generation of Renesas products. Renesas also works with third-party tool vendors to create alternative tool solutions for customers. For the tools provided by Renesas, use cases and assumed TCLs are analyzed, appropriate qualification is fulfilled as part of the development, and appropriate support for qualification is provided to customers.

References:

[1] Renesas Functional Safety Support for Automotive (3) – Applying the ISO 26262 Standard to SEooC Software

[2] ISO 26262:2018-8 11 Confidence in the use of software tools

[3] Artificial Intelligence | Wikipedia

[4] Artificial Intelligence Reshaping the Automotive Industry

[5] ISO 26262:2018-8 11.4.5 Evaluation of a software tool by analysis

[6] UL Standard | UL 4600

[7] ISO/IEC DTR 5469 — Artificial intelligence - Functional safety and AI systems

[8] Serin, G., Sener, B., Ozbayoglu, M., Unver, H. O. (2020). Review of tool condition monitoring in machining and opportunities for deep learning. The International Journal of Advanced Manufacturing Technology.

[9] tensorflow.org

[10] pytorch.org

[11] caffe.berkeleyvision.org

[12] tvm.apache.org

[13] CARIAD: Our road to automated driving