In the previous post, we explained the 10 Mbit/s automotive protocols CAN XL and 10BASE-T1S. Now we focus on potential use cases and their implications for hardware and software.

Application Examples of 10 Mbit/s Protocols

CAN XL or 10BASE-T1S can replace CAN FD or other existing networking protocols wherever application requirements exceed the available bandwidth. There are also new use cases in which these protocols may be used exclusively.

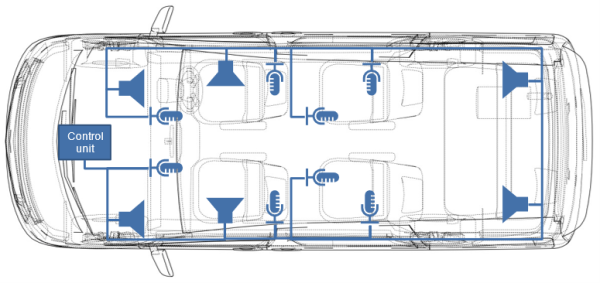

One example is connecting microphones and speakers for active noise cancellation, road noise cancellation, or eCall applications. For these applications, several microphones and speakers are placed in the cabin, and the resulting network traffic exceeds what CAN FD can provide. As the industry moves away from communication protocols developed specifically for a single application, OEMs prefer more general protocols such as CAN XL and Automotive Ethernet.

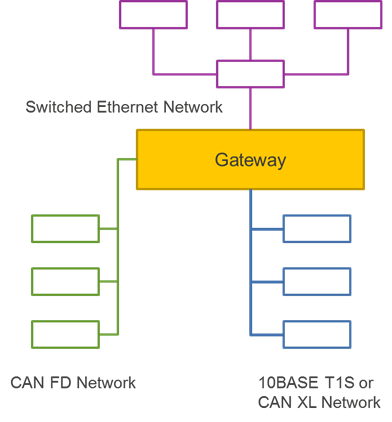

Similarly, sensors with low bandwidth demands are connected via existing protocols, forming sub-networks organized in zones or domains. These sub-networks are typically connected through gateways to the rest of the in-vehicle network (IVN), which usually uses Ethernet links of 100 Mbit/s or more (Figure 2).

Even today, a gateway ECU that transfers CAN messages from one channel to another is a complex component. Adding a protocol with more bandwidth and longer payloads further increases this complexity and, if today's hardware and software strategies are retained, requires correspondingly higher processing performance.

Ideally, a gateway ECU only forwards messages or PDUs without manipulating their content. In practice, gateway ECUs often modify data and apply conditional forwarding rules. Such extensions are expected to be avoided in the future, following a message-based communication concept or a service-oriented architecture (SOA). The following discussion therefore assumes pure message-forwarding gateways.

Ethernet Gateway Functions for 10 Mbit/s Protocols

The role of the analyzed gateway module is to transfer messages from one protocol to another without changing the content of the message.

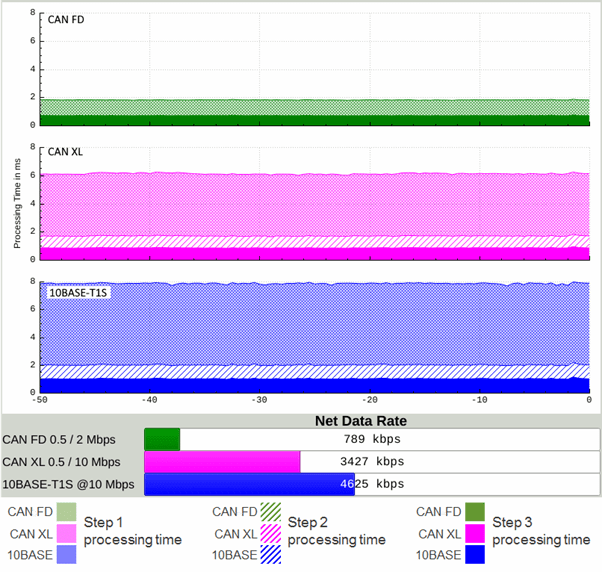

Two factors determine the CPU resources required for gateway operation. First, the payload length, which determines the net data rate that must be handled. Second, the event rate, i.e., how often the gateway process is triggered. Bus utilization (bus load) alone is not a sufficient measure, because it combines both factors: the same bus load can result from many short frames or from a few long ones. For a gateway ECU that forwards traffic from any given protocol upstream to an Ethernet interface, three basic steps are necessary, each depending differently on these two factors:

Step 1: Transfer the messages from the receiving interface to memory. The CPU load depends on the amount of data transferred per time interval, regardless of whether it arrives as many short frames or a few long ones. In other words, this step is determined by the net data rate.

Step 2: Construct the target Ethernet message. If the source protocol is already Ethernet (e.g., 10BASE-T1S), a simple switching function is sufficient. Otherwise, the protocol-dependent ingress header must be replaced by an Ethernet header. This task can become considerably more complex, for instance when a protocol with a shorter payload length is used and a transport protocol must be removed before the data is passed to the upstream interface. The CPU load depends on both the processed payload size and the event rate.

Step 3: Make the constructed Ethernet message available to the target interface and initiate the transmission. Assuming the Ethernet interface operates on pointers to memory, the required CPU load is determined by the event rate of transmissions rather than by the payload size.

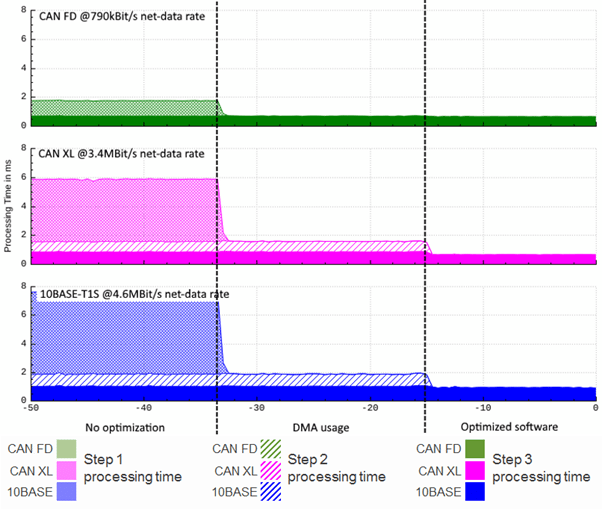

Figure 3 shows the CPU processing time, accumulated over 1 second, of a simple CAN FD, CAN XL, and 10BASE-T1S to Ethernet gateway at 50% bus load with 256 bytes of payload.

At first glance, 10BASE-T1S appears to require more CPU time than CAN XL to process the bus traffic. In fact, both require the same processing time per unit of payload: the net data rate of 10BASE-T1S is around 30% higher than that of CAN XL, and its processing time is higher by roughly the same proportion. The same reasoning applies to CAN FD, which has a relatively low processing time but also a very low net data rate.

Experience with today’s gateway applications and ECU designs shows that latency requirements are already difficult to meet at current event and data rates. With protocols offering more bandwidth, meeting these targets becomes even harder: assuming that multiple CAN and Ethernet channels are connected, the processing time at the same bus load is 3 to 4 times higher than today. Solutions are needed to overcome this situation.

Gateway Optimization in Hardware and Software

The graph already suggests the most obvious solution: relieve the CPU of the burden of moving data into memory. This is not a typical CAN approach. Traditionally, CAN controllers use memory local to the IP for their transmit and receive buffers. This was fine for Classical CAN and acceptable for CAN FD, but with larger payloads combined with higher data rates, this approach will change. Several CAN implementations already allow data transfer via a system DMA, which must be programmed and configured. This approach is rarely used, however, because DMAs are difficult to use in an AUTOSAR environment. In the future, the DMA function will be part of the IP and fully transparent to the software. Received data “appears” in FIFOs and buffers in defined system memory and is directly accessible; the same applies to transmit data. This concept is already used in Renesas’ Ethernet interfaces and will be extended to the CAN XL protocol controller. The effect of this simple enhancement is shown in Figure 5.

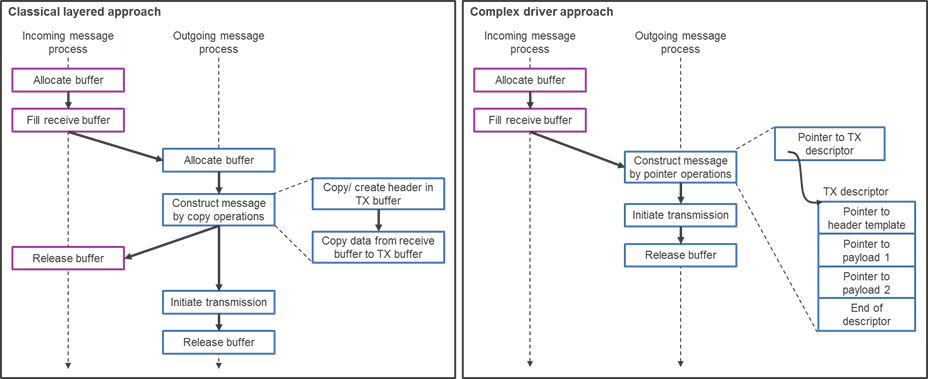

The second part of the routing process that requires attention is buffer handling and the exchange of information between incoming CAN XL/10BASE-T1S messages and outgoing 100BASE-T1 messages.

A typical layer-oriented implementation uses two processes (left-hand side of Figure 4). The incoming process reserves a buffer from its receive buffer pool and, once the buffer is filled, informs the outgoing process that a message is pending for transfer. Because the two processes are structured and run independently, the received message must be transferred into a newly allocated buffer for further processing. To construct the outgoing message, a new transmission header is prepared, and the data from the receive buffer is copied behind the header into the new buffer. After this copy operation, the incoming process can release the original buffer. The transmission buffer is released back into the pool for outgoing messages once the transmission is complete, either by waiting for completion or in a scheduled task.

This method has two main disadvantages. First, it requires inter-process communication, which should be minimized, even in cases like this one where there is no dependency or waiting condition before proceeding. Second, it relies on data copy operations, whereas pointer operations should be preferred.

The right-hand side of Figure 4 shows a simplified process, implemented as a complex device driver, in which the incoming and outgoing communication interfaces share a common buffer pool. This eliminates the double allocation and release of buffers as well as the inter-process communication. The extended pointer-operation support in Renesas’ Ethernet communication interfaces allows Ethernet messages to be created flexibly without any copy operation and enables the use of header templates. Such a structure also makes it possible to aggregate messages from the incoming interfaces, queue them, and easily create an Ethernet message, for example following the IEEE 1722 tunneling protocol.

Figure 5 shows the effect of hardware and software optimization on CPU utilization. Renesas has prepared a demonstration setup in which CAN XL and 10BASE-T1S traffic is forwarded to a 100BASE-T1 Ethernet backbone. For simplicity and a fair comparison, the CAN XL payload already contains an IPv4 header, so the gateway function for CAN XL is reduced to adding the Ethernet L2 header. For 10BASE-T1S, only a software switch function is implemented.

The left-hand side of the graph shows the CPU processing time without any optimization.

In the middle, DMA is used to transfer the data from the communication interface to system memory. New messages are still created using copy operations and dynamic header creation.

The right-hand side of the graph shows the optimized software flow: a complex driver with a common buffer pool and pointer operations for header templates and payload data.

Conclusion

The 10 Mbit/s communication protocols will place an additional load on gateway CPUs. With a well-designed software approach and appropriate hardware features, however, the required CPU performance does not grow in proportion to the increase in bandwidth and net data rate.

The Ethernet communication interfaces from Renesas provide automatic data transfer and support pointer operations for complex message assembly in system memory. The next-generation CAN XL interface will move in the same direction.

Both 10BASE-T1S and CAN XL will have application domains that they dominate. Both are developed by trusted standardization bodies and are supported by industry players that provide products and solutions, including Renesas.